Complete AI Course on Prompting, Evals, RAG, and Fine-Tuning | Adam Loving (Meta)

A beginner-friendly technical deep dive on building AI products

Dear subscribers,

Today, I want to share a new episode with Adam Loving.

Adam is a partner engineer at Meta who has helped 100s of companies build AI products. Our interview is a complete, beginner-friendly AI course on how to use prompting, evals, RAG, and fine-tuning to build great AI products.

Watch now on YouTube, Apple, and Spotify.

Adam and I talked about:

(00:00) The 2 types of AI optimizations every PM needs to know

(02:52) 4 tips to craft compelling AI prompts

(07:14) 4 types of AI evaluations to consider

(12:06) The scoring trick for advanced AI evaluations

(21:50) Retrieval augmented generation explained

(27:29) Is fine-tuning basically lobotomizing your AI model?

(30:31) Now I finally understand vector databases

(41:40) Why Meta thinks open source will win

(44:04) Adam's best advice after leading 100s of AI integrations

How to optimize AI’s responses

Welcome Adam! So what are the main ways to improve AI responses?

Well, everyone should start with prompt engineering. Beyond that, you can:

Optimize the LLM’s context. That's what retrieval augmented generation (RAG) is for.

Optimize how the LLM acts and responds. That’s fine-tuning, which is like sending your model to specialized job training after college.

With fine-tuning, you're loading specific information and formatting rules into the model itself. In some sense, you're dumbing it down or making it more focused: you constrain the LLM to answer questions only in a certain way.

How to craft perfect prompts

Before talking about RAG and fine-tuning, what are your best tips for prompting? Let’s use an example of a Lululemon customer support agent to make this practical.

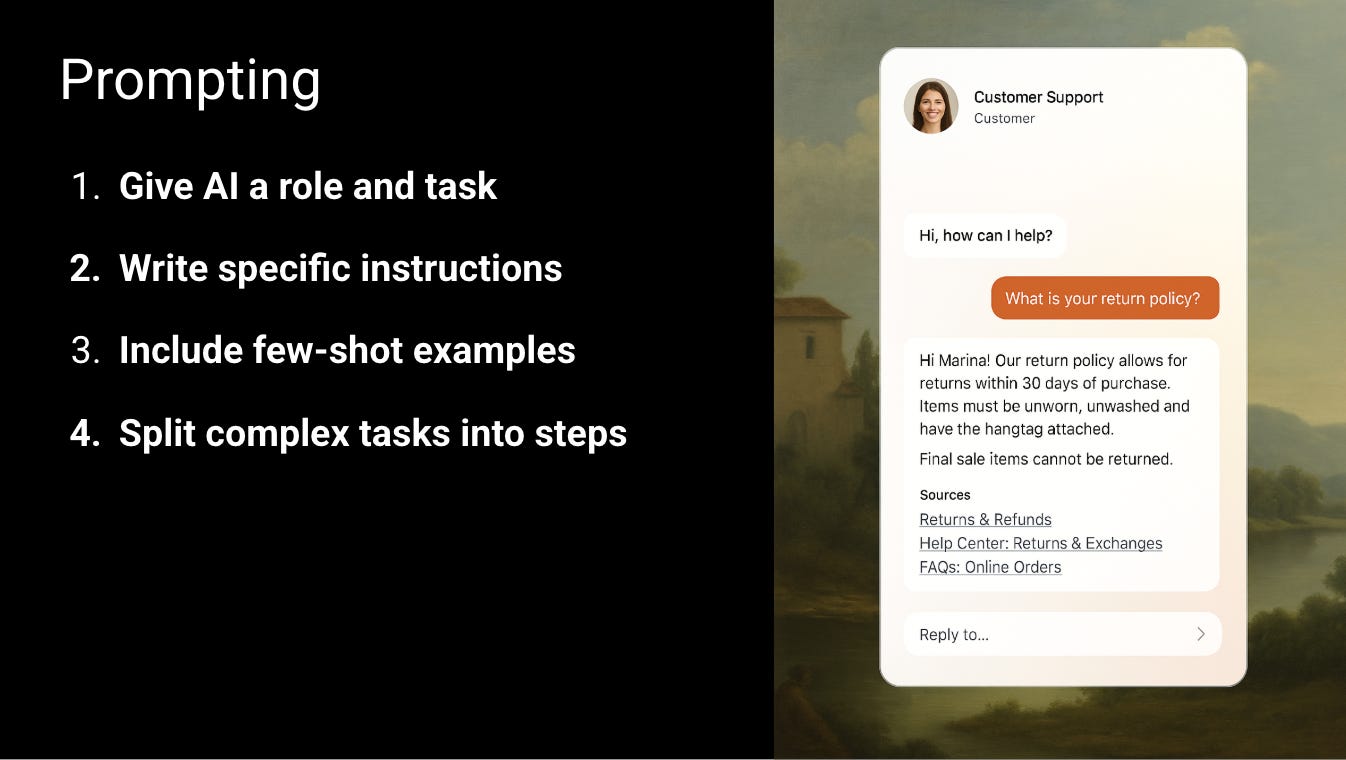

I think of prompting as sculpting. You start with an LLM that knows a little bit about everything. Then you feed it more details about your world and give it specific instructions. So my top tips for prompting are:

Use few-shot examples. Give AI specific examples of good responses to guide its behavior.

Force step-by-step reasoning. If you’re using a non-reasoning model, have it work through problems methodically rather than jumping to conclusions.

Break complex tasks into multiple prompts. Instead of one giant prompt, use a series of prompts with evals at each step of the way. For example, "First, consider my workout plan. Now recommend clothes based on that plan."
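To make the first two tips concrete, here is a minimal sketch of a few-shot prompt for a support agent. The example question-and-answer pairs are hypothetical (not real store policy), and the `role`/`content` message shape is an assumption based on the chat format many LLM APIs accept. Adapt it to whichever API you use.

```python
from typing import Dict, List, Tuple

# Hypothetical few-shot pairs. In practice, pull these from real,
# high-quality support conversations.
FEW_SHOT_EXAMPLES: List[Tuple[str, str]] = [
    (
        "Do these leggings run small?",
        "Great question! Most customers find them true to size. "
        "If you're between sizes, I'd suggest sizing up for comfort.",
    ),
    (
        "Can I wear this jacket for running in the rain?",
        "It's water-resistant, so it handles light rain well. "
        "For heavy rain, I'd recommend a fully waterproof shell.",
    ),
]


def build_messages(question: str) -> List[Dict[str, str]]:
    """Build a chat prompt with instructions, few-shot examples, and the question."""
    system = (
        "You are a friendly customer support agent for an apparel brand. "
        # Tip 2: ask a non-reasoning model to work step by step.
        "Think through the customer's problem step by step before you answer."
    )
    messages = [{"role": "system", "content": system}]
    # Tip 1: show the model examples of good responses.
    for user_text, assistant_text in FEW_SHOT_EXAMPLES:
        messages.append({"role": "user", "content": user_text})
        messages.append({"role": "assistant", "content": assistant_text})
    # The real customer question always goes last.
    messages.append({"role": "user", "content": question})
    return messages


if __name__ == "__main__":
    for message in build_messages("Which shorts are best for hot yoga?"):
        print(f"{message['role']}: {message['content']}")
```

The resulting list can be passed to a chat-style model call. Swapping in better examples is usually the cheapest way to change the agent's tone.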

Tip #3 is new to me. I hadn’t thought about splitting one complex prompt into multiple simple ones before.

Yes, it works great for prompting. When someone asks "What's your return policy?", the AI might append multiple steps: First, read the FAQs. Then list the relevant ones. Finally, format a customer-friendly answer.
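The return-policy flow above could be sketched as a two-step prompt chain. This is only an illustration: `answer_with_chain`, `fake_llm`, and the sample FAQs are made-up names and data, and the model is passed in as a plain function so the sketch runs without any API key. The check between steps is a stand-in for a real eval.

```python
from typing import Callable, List


def answer_with_chain(
    question: str, faqs: List[str], call_llm: Callable[[str], str]
) -> str:
    """Answer a support question with two small prompts instead of one big one."""
    # Step 1: read the FAQs and list only the relevant ones.
    faq_text = "\n".join(f"- {faq}" for faq in faqs)
    relevant = call_llm(
        f"Here are our FAQs:\n{faq_text}\n\n"
        f"List only the FAQs relevant to this question: {question}"
    )

    # Simple check between steps (a placeholder for a real eval).
    if not relevant.strip():
        return "I couldn't find a relevant FAQ. Let me connect you with a team member."

    # Step 2: format a customer-friendly answer from the relevant FAQs.
    return call_llm(
        f"Using only these FAQs:\n{relevant}\n\n"
        f"Write a short, customer-friendly answer to: {question}"
    )


def fake_llm(prompt: str) -> str:
    """Stand-in for a real model call so the sketch runs offline."""
    return f"[model output for a {len(prompt)}-character prompt]"


if __name__ == "__main__":
    sample_faqs = [
        "Returns: hypothetical policy text goes here.",
        "Shipping: hypothetical policy text goes here.",
    ]
    print(answer_with_chain("What's your return policy?", sample_faqs, fake_llm))
```

Because each step is its own prompt, you can inspect or score the intermediate output (the list of relevant FAQs) before the final answer is written.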

How to write effective evals

OK, so we can’t talk about prompting without talking about AI evaluations. Everyone says AI evals are important, but how exactly do you write a great one?

You have a great tip that I think most people don’t realize.