DeepSeek: A Cutting-Edge AI Reasoning Model That's Free to Use

A comprehensive guide to DeepSeek's impact and real-world use cases, including how to prompt it and run it locally to protect your data

Dear subscribers,

Today, I want to do a deep dive on DeepSeek.

DeepSeek R1 is an AI reasoning model from China that performs on par with OpenAI o1 on many reasoning benchmarks. It’s also one of the first widely available models to show you its raw thinking in real time. In fact:

I find DeepSeek's thinking output more fascinating than its actual answer.

Watching it think (and sometimes struggle) through your problems makes it feel deeply human. So here’s a comprehensive guide on:

Why AI reasoning models are different

How DeepSeek is shaking up the AI industry

The best way to prompt AI reasoning models (PPFO)

5 use cases that reasoning models excel at

How to run DeepSeek locally to protect your data

Course Preview: Using reasoning models as your AI coach

Here's something I've discovered while building my upcoming Become an AI-Powered Product Leader course (March 29-30):

Reasoning models are great AI coaches because they show you the trade-offs they’re making in real time as they think through your challenges.

But to make them act like a trusted coach, you have to give them the right context. I’ll show you exactly how to do that in my course. 45+ students have signed up, and there are still a few spots left.

Why AI reasoning models are different

LLMs like GPT-4o and Claude Sonnet generate text through next-token prediction: they read your prompt and predict the next token, one token at a time, usually starting on the answer right away. In my seven advanced AI prompt techniques guide, I showed you how to add <thinking> tags to push LLMs to reason step by step before presenting an answer.
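To make next-token prediction concrete, here's a toy sketch in Python. It is nothing like a real LLM's internals: it's a hypothetical bigram "model" that just counts which word follows which in a tiny made-up text, then generates by repeatedly appending the most likely next word (greedy decoding). Real models predict over subword tokens with a neural network and often sample rather than always taking the top choice, but the one-token-at-a-time loop is the same basic idea.

```python
from collections import Counter, defaultdict


def train_bigram(corpus: str) -> dict[str, Counter]:
    """Count which word follows which in a tiny training text (toy example)."""
    tokens = corpus.split()
    follows: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(tokens, tokens[1:]):
        follows[current][nxt] += 1
    return follows


def generate(follows: dict[str, Counter], prompt: str, max_new_tokens: int = 5) -> str:
    """Greedy decoding: repeatedly append the single most likely next word."""
    tokens = prompt.split()
    for _ in range(max_new_tokens):
        candidates = follows.get(tokens[-1])
        if not candidates:  # no known continuation, so stop early
            break
        next_token, _count = candidates.most_common(1)[0]
        tokens.append(next_token)
    return " ".join(tokens)


model = train_bigram("the cat sat on the mat and the cat sat on the rug")
print(generate(model, "the", max_new_tokens=4))  # the cat sat on the
```

Ask it for more words and it cycles through "cat sat on the" again, because each step only looks at the previous word and never plans the response as a whole.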

Reasoning models like OpenAI o1 and DeepSeek R1 are trained to think before answering by default. For example, I asked DeepSeek to tell me a joke. Instead of jumping straight to a punchline, I watched it debate what kind of humor would land best. It felt like watching a comedian workshop material in real time.

This thinking output is what makes DeepSeek seem more human than other LLMs.

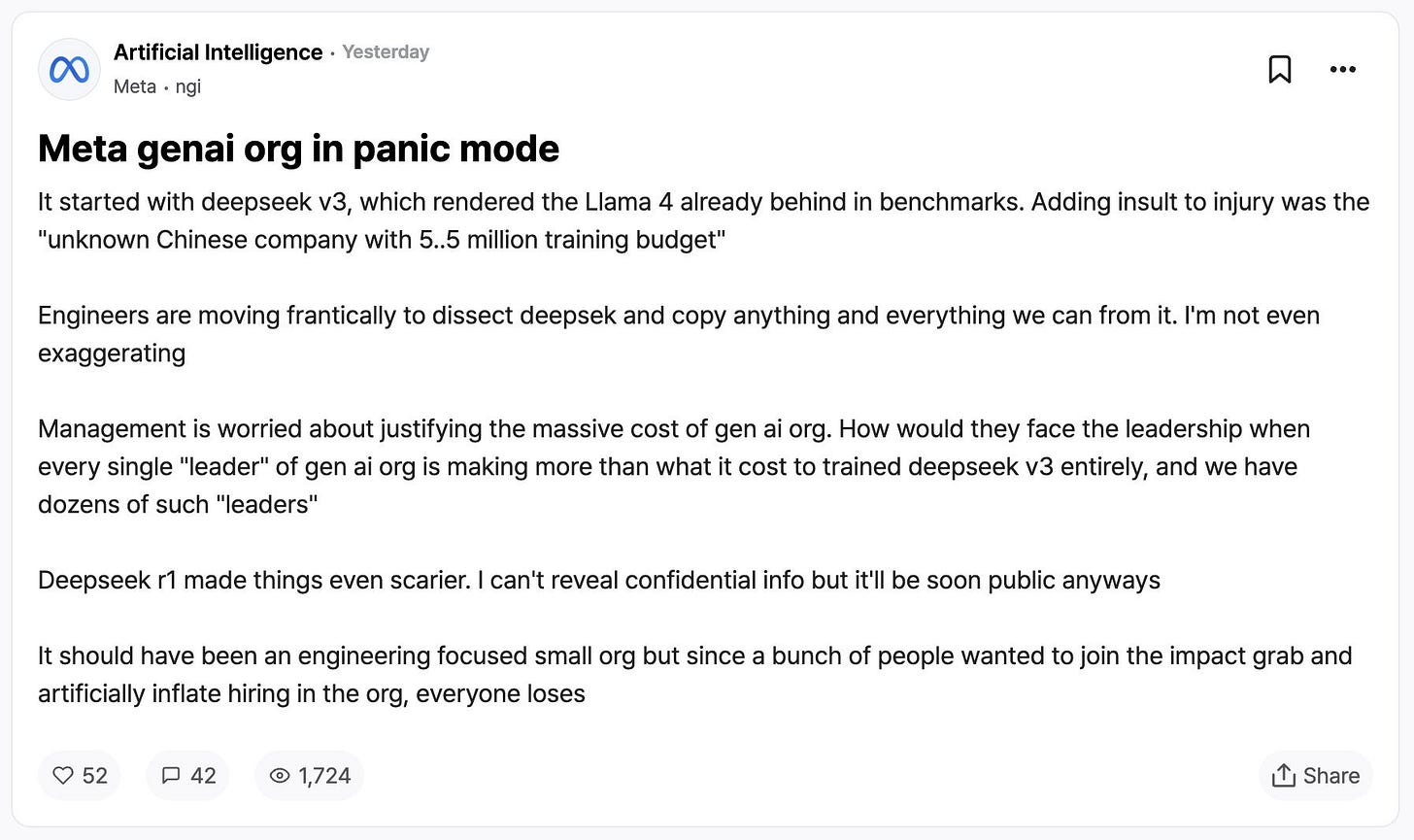
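When you run the open-weight R1 models yourself, the raw thinking comes back in the same text as the answer, wrapped in <think> and </think> tags. Here's a minimal Python sketch for separating the two, assuming that tag format. The `split_reasoning` helper and the sample output are hypothetical, and hosted APIs may return the reasoning in a separate field instead.

```python
def split_reasoning(raw_output: str) -> tuple[str, str]:
    """Split an R1-style completion into (reasoning, final_answer)."""
    head, sep, tail = raw_output.partition("</think>")
    if not sep:
        # No closing tag: assume there is no reasoning block at all.
        return "", raw_output.strip()
    # Some chat templates put the opening <think> in the prompt, so it may
    # or may not appear in the output; remove it if it is there.
    reasoning = head.replace("<think>", "", 1).strip()
    return reasoning, tail.strip()


# Hypothetical raw output, for illustration only.
sample = (
    "<think>\nThe user wants a joke. A short pun would probably land best.\n</think>\n\n"
    "Why don't skeletons fight each other? They don't have the guts."
)
reasoning, answer = split_reasoning(sample)
print("THINKING:", reasoning)
print("ANSWER:", answer)
```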

How DeepSeek is shaking up the AI industry

DeepSeek R1 is a game-changer because:

It’s free for consumers and costs a small fraction of OpenAI’s API prices.

Its weights are openly released under the MIT license, so developers can customize, fine-tune, and self-host it.

It was built by a small team in China at a fraction of the usual cost.

The fact that DeepSeek is from China raises obvious data privacy concerns. At the end of this post, I’ll share how you can run it locally to protect your data.

But first, let's talk about what this means for the AI industry:

Pretraining data is not the only bottleneck. The prevailing wisdom was that models would hit a wall once they ran out of internet text to train on. But DeepSeek R1 (and OpenAI o1) showed that models can get smarter through reinforcement learning with minimal human feedback, learning to "think more" before they answer.
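What does "minimal human feedback" look like in practice? The DeepSeek-R1 paper describes rule-based rewards: an accuracy check against a known answer and a format check that the reasoning sits inside <think> tags, instead of a learned model of human preferences. Here's a minimal Python sketch of that idea. The function name, the regex, the exact-match grading, and the 0.5 / 1.0 weights are my own illustrative assumptions, not DeepSeek's code.

```python
import re

# Hypothetical rule-based reward, loosely modeled on the "accuracy" and
# "format" rewards described in the DeepSeek-R1 paper. Weights are assumptions.
THINK_THEN_ANSWER = re.compile(r"<think>.+?</think>\s*(.+)", re.DOTALL)


def rule_based_reward(completion: str, reference_answer: str) -> float:
    match = THINK_THEN_ANSWER.fullmatch(completion.strip())
    if match is None:
        # Format check failed: the reasoning wasn't wrapped in <think> tags.
        return 0.0
    format_reward = 0.5
    final_answer = match.group(1).strip()
    # Accuracy check: compare against a known answer, no human grader needed.
    accuracy_reward = 1.0 if final_answer == reference_answer else 0.0
    return format_reward + accuracy_reward


print(rule_based_reward("<think>2 + 2 is 4.</think> 4", "4"))  # 1.5
```

In a real training loop, a score like this would be computed for many sampled answers per question and used to update the model. The point is that the signal comes from simple rules and known answers rather than from people rating each response.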

Is AI model building a money trap? DeepSeek is free for consumers, and its API costs roughly 3 to 4% of what OpenAI charges for o1. If open-source models are catching up this quickly, companies that sell proprietary model access will likely need to differentiate in other areas.
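The price gap is easy to sanity-check with a quick calculation. This Python sketch assumes the per-million-token list prices at R1's launch in January 2025 (R1: $0.55 input on a cache miss, $2.19 output; o1: $15 input, $60 output), which may have changed since, and a hypothetical request with 2,000 prompt tokens and 8,000 output tokens.

```python
# Assumed launch list prices in USD per million tokens; check current pricing.
PRICES = {
    "deepseek-r1": {"input": 0.55, "output": 2.19},  # cache-miss input price
    "openai-o1": {"input": 15.00, "output": 60.00},
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Dollar cost of one request at the assumed per-million-token prices."""
    p = PRICES[model]
    return (input_tokens * p["input"] + output_tokens * p["output"]) / 1_000_000


# Hypothetical workload. Assumption: both providers bill the model's
# thinking as output tokens, so reasoning-heavy answers are output-heavy.
r1 = request_cost("deepseek-r1", 2_000, 8_000)
o1 = request_cost("openai-o1", 2_000, 8_000)
print(f"R1: ${r1:.4f}  o1: ${o1:.4f}  ratio: {r1 / o1:.1%}")
# R1: $0.0186  o1: $0.5100  ratio: 3.7%
```

Because output tokens dominate the bill for reasoning models, the ratio stays close to the output-price ratio (2.19 / 60) for most realistic workloads.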

Value is shifting to the application layer. More model competition is great for AI applications, from large players (e.g., ChatGPT) to startups (e.g., Granola). The best products will likely use many models and differentiate on UX, proprietary data, and solving customer problems better than horizontal tools and models.

Now, let’s get practical and walk through how to prompt reasoning models, when to use DeepSeek instead of regular LLMs, and how to run the model locally.

The PPFO prompting method for reasoning models

I’ve been using DeepSeek nonstop, and prompting it well takes a different approach than prompting regular LLMs.

Instead of using <thinking> tags, reasoning models work best if you prompt them with the PPFO (purpose, planning, format, output) method. This method gives these models clear checkpoints to pause and validate their thinking.
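If you reuse PPFO prompts often, it can help to template them. Below is a hypothetical Python helper (`build_ppfo_prompt` is my own name, not part of any library) that simply puts each of the four PPFO parts under its own label, in order. The sample content is made up for illustration.

```python
def build_ppfo_prompt(purpose: str, planning: str, format_spec: str, output: str) -> str:
    """Assemble a prompt with one clearly labeled section per PPFO part."""
    sections = {
        "Purpose": purpose,
        "Planning": planning,
        "Format": format_spec,
        "Output": output,
    }
    # Separate, labeled sections are meant to act as checkpoints the model
    # can revisit while it reasons.
    return "\n\n".join(f"{label}:\n{text.strip()}" for label, text in sections.items())


prompt = build_ppfo_prompt(
    purpose="Decide whether our team should build or buy a feature-flagging tool.",
    planning="List the key trade-offs first, then check each against our constraints.",
    format_spec="A short comparison table followed by one paragraph of reasoning.",
    output="A single recommendation (build or buy) with a confidence level.",
)
print(prompt)
```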

Here’s an example PPFO prompt: