Exclusive: Inside the Best AI Model for Coding and Writing | Scott White (Anthropic)

Claude's Head of Product on why Claude 3.7 Sonnet changes everything, how Anthropic builds AI products, and the most essential skills to build in the AI era

Dear subscribers,

Today, I want to share a special episode with Scott White.

Scott is the Head of Product for Claude at Anthropic, and I’m excited to be the first to interview him about the hybrid reasoning AI model Claude 3.7 Sonnet. Scott also gave me a behind-the-scenes look at how Anthropic builds AI products, the most essential skills in the AI era, and how Claude will evolve from assistant to agent.

This is one of my favorite interviews ever and a must-watch for anyone who wants to learn how one of the best AI companies builds products.

Watch now on YouTube, Apple, and Spotify.

This episode is brought to you by Vanta — Join 9,000+ companies like Atlassian and Quora, and use Vanta to manage risk and prove security in real-time. Get $1000 off at vanta.com/peter

Scott and I talked about:

(00:00) How Claude will evolve from assistant to agent

(01:58) Inside Claude 3.7: The first hybrid reasoning AI

(04:51) How Claude became best in class at coding

(08:50) Top 3 ways that Scott uses Claude to build Claude

(13:49) PMs can now design and collapse the talent stack

(17:00) Will AI models become commoditized, and Claude's personality

(22:34) How to work with AI researchers

(27:20) Step by step how Scott builds AI products

(32:37) Why every PM must master writing AI evals

(35:53) Whether Mike Krieger has brought Instagram's principles to Anthropic

(38:41) How to get hired at Anthropic

(43:01) Flipping the script on Claude's future

An inside look at Claude’s new AI reasoning model

Welcome, Scott! So let’s talk about Claude 3.7 Sonnet. What excites you most about it?

Sonnet 3.7 is our first hybrid reasoning model. It can produce both instant responses and step-by-step thinking for more complicated tasks.

It’s similar to how humans think fast and slow.

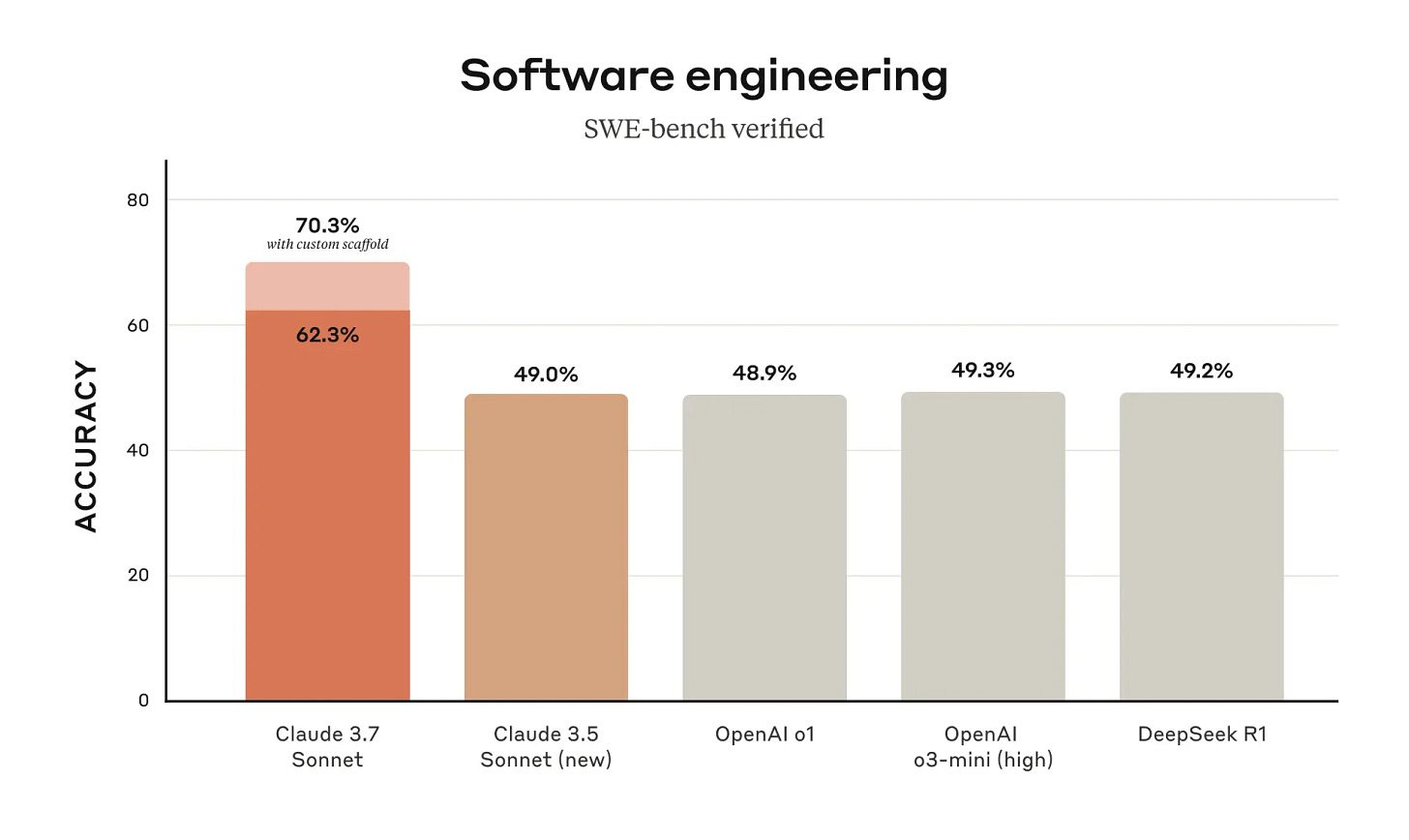

We’ve also optimized it less for math competitions and more for real-world use cases in the workplace. For example, it’s best in class at writing code. Having a great coding model lets us build better products faster, so it’s a virtuous cycle. It’s also surprisingly good at visualizations like Mermaid diagrams and prototypes.

Claude is my daily AI driver because 80% of my use cases are writing or coding. How do you train the model to become good at practical use cases?

It's not too dissimilar from traditional product development.

It starts with a strong vision of what we want to be great at—who our target customers are, what problems we want to solve, and the use cases that we want to support.

We’ve built tight feedback loops with customers through Claude.ai and Claude for Work. These loops help us prioritize specific training techniques and the kinds of data and use cases we want to excel at.

We also use these products extensively ourselves.

One of my old bosses, Andy Rachleff (CEO of Wealthfront), used to say, "The only thing that matters is if the dogs are eating the dog food."

Eating our own dog food and keeping tight customer feedback loops ground our model in solving the practical problems that matter.

Using Claude to do product work at Anthropic

So, how do you use Claude to do product work at Anthropic? When do you use normal Claude vs. extended thinking Claude?

I use normal Claude for document writing and summarization. For example, I use it extensively to help me write PRDs, create evals based on the PRDs, and then implement those evals in the product.

I use extended thinking to connect the dots across different documents for a large initiative. I typically create a Claude project with the PRDs, evals, and more to do this. Extended thinking is also great for coding. We recently launched an agentic coding tool called Claude Code that’s become integral to how we build products internally.

Finally, I use extended thinking to create visualizations to clarify user flows in product specs. It’s surprisingly good at that. Even engineers are doing this to communicate their vision to me and the designers, which helps close feedback loops on what we want to build.

When you say visualization, do you mean flow charts or actual design prototypes?

Both! We create Mermaid diagrams to show what a user flow should look like and full React components to demo a clickable prototype. I've been very surprised at how good Sonnet 3.7 with extended thinking is at creating these.

I do that, too! How does your designer feel about PMs creating prototypes?

I think everyone’s workflows will start overlapping with AI.

We have designers putting out pull requests for quality-of-life improvements, engineers and product people creating visualizations, and even salespeople writing specs because they hear feedback directly from the field.

We're learning to embrace these touch points rather than being defensive. I get inspired when engineers create visualizations. It happened this morning—an engineer put out a compelling mock, and we’ll probably make product decisions based on that later today. These are the new ways of working together that get me excited.

Yes, I love how the talent stack is collapsing in the best companies. It’s much more fun that way.

Absolutely. I'm not going to be the production engineer, but I can create a small prototype to get the ball rolling. It's not about being experts at each other’s functions, it’s about breaking through the static friction to start collaborating.

Will AI models become commoditized?

So, some people think that AI models will become commoditized. Do you agree with this take?

Good question. I think businesses are taking a multi-model approach rather than choosing just one. There’s often an orchestration layer that lets companies switch models based on the use case.

For example, many companies adopt Claude for Work or Claude Code for software development while using other models for other use cases.

There's also a cost component—we have a model family with Claude Haiku, Sonnet, and Opus for different performance and cost thresholds. And, of course, we also offer products built on our models like Claude.ai that people love using.

So, I'm not super concerned about model commoditization because there are many vectors of choice and partnership opportunities.

I also love using Claude because it has a nice and thoughtful personality.

Yes, it’s hard to create evals for something like "good vibes," but we take it seriously. Amanda Askell is the genius behind Claude's personality. We steer Claude to have specific traits like curiosity, honesty, open-mindedness, self-awareness, and appreciation of diverse POVs.

It's intangible—like why someone is your friend. You just like them.

Building AI products in close partnership with research

Let’s talk a bit more about the nuances of building AI products. The traditional 3-legged stool is product, design, and engineering. But with AI products, research also plays a major role. How do you work with AI researchers?

Great question. A product team is more like a four-legged chair now.

Research must be there throughout the product development process because we now have agency to change the model.

There's a virtuous loop—the research team helps us solve product problems and we help them improve the model as their customer. There are a few key ingredients to make this loop work:

A shared vision for where our product and company are going: the capabilities we need Claude to be good and safe at. This shared vision helps us prioritize the same bets across product and research.

De-risking research as early as possible by prototyping many products simultaneously. Features like artifacts started as prototypes. Being open to surprises is very important.

Tight feedback loops by having researchers embedded in our product teams. They get exposed to all the customer learnings we’re getting and we get early access to what looks promising on the research side.

All of this requires single-threaded ownership: a researcher who is also a product expert and can translate problems into reinforcement learning environments that address the failures we're seeing. Having that end-to-end connectivity has been transformational.

Building a new AI product end to end and why every PM needs to learn how to write evals

Let's make this super practical. Can you walk through how you built a recent product from ideation to launch?

Sure, I can talk about Styles, which lets you tailor Claude's output to a specific style—concise, explanatory, or formal.

First, I gathered feedback from the team and customers on what we’re excited about building. Then:

I write a PRD with Claude by pasting the customer feedback into a Project and then dictating the problems I’m solving and the solution I want to build.

The next critical step is writing evaluations. For example, one eval for Claude’s explanatory style tests whether it’s good at acting as a domain expert who tailors technical explanations to match your knowledge.

I build these evals using actual user preference strings that would appear in the product, paired with prompts that test if Claude correctly solves the specific problem. Then I have Claude evaluate whether it's properly using these preference strings to match my expertise level or format requirements.

This approach transforms user scenarios into a structured evaluation framework that Claude can understand and grade. We keep refining until performance reaches our standards, giving the team specific language to iterate on both the system prompt and model.
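The preference-string approach described above can be sketched roughly as follows. This is a minimal illustration, not Anthropic's internal tooling: the `EvalCase` type, the judge prompt wording, and the PASS/FAIL reply format are all hypothetical, and the actual call to Claude is left out so the sketch stays self-contained.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class EvalCase:
    preference: str  # a user preference string as it might appear in the product
    prompt: str      # a prompt that exercises the feature


def build_cases(preferences: list[str], prompts: list[str]) -> list[EvalCase]:
    """Pair every preference string with every test prompt."""
    return [EvalCase(pref, prompt) for pref, prompt in product(preferences, prompts)]


# Hypothetical judge prompt: asks a grader model whether a reply honored the preference.
JUDGE_TEMPLATE = (
    "The user's saved preference is:\n{preference}\n\n"
    "The user asked:\n{prompt}\n\n"
    "The assistant replied:\n{response}\n\n"
    "Does the reply match the expertise level and format the preference asks for? "
    "Answer PASS or FAIL on the first line, then explain briefly."
)


def judge_prompt(case: EvalCase, response: str) -> str:
    """Fill the judge template for one case and one model response."""
    return JUDGE_TEMPLATE.format(
        preference=case.preference, prompt=case.prompt, response=response
    )


def passed(judge_reply: str) -> bool:
    """Read the verdict from the first line of the judge's reply (assumed format)."""
    lines = judge_reply.strip().splitlines()
    return bool(lines) and lines[0].strip().upper().startswith("PASS")


if __name__ == "__main__":
    cases = build_cases(
        ["I'm a senior backend engineer; skip the basics.", "I'm new to programming."],
        ["How does GraphQL work?", "What is a database index?"],
    )
    print(len(cases))  # 4 cases: 2 preferences x 2 prompts
    print(judge_prompt(cases[0], "<model response goes here>"))
```

Crossing every preference with every prompt is one simple way to make sure each preference is exercised on more than one kind of question.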

Just as traditional software development involves building products, writing tests, and running those tests during deployment, our evals system performs a similar function. When developing a new model or updating our system prompt, we need to verify we're not breaking existing features.

For instance, during the final stages of Claude 3.7's development, we discovered it was breaking the Preferences feature. Thanks to our evals, we caught this before release and made necessary adjustments to ensure compatibility.

So the process is: Take user problems, translate them into use cases, create evals, iterate toward release, then establish those evals as part of a regression set for future development.
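The regression step can be pictured as a simple gate that compares a candidate model or system prompt against the last accepted baseline, which is the kind of check that would flag a breakage like the Preferences issue mentioned above. A minimal sketch, assuming each eval has already been reduced to a single pass rate between 0 and 1; the eval names, the scores, and the 0.02 tolerance are made up for illustration.

```python
def find_regressions(
    baseline: dict[str, float],
    candidate: dict[str, float],
    tolerance: float = 0.02,
) -> list[str]:
    """Return the evals whose pass rate fell by more than `tolerance`,
    plus any baseline eval the candidate run did not report."""
    regressions = []
    for name, base_score in sorted(baseline.items()):
        new_score = candidate.get(name)
        if new_score is None or new_score < base_score - tolerance:
            regressions.append(name)
    return regressions


if __name__ == "__main__":
    # Illustrative numbers only, not real results.
    baseline = {"preferences": 0.95, "styles/concise": 0.88, "styles/explanatory": 0.92}
    candidate = {"preferences": 0.71, "styles/concise": 0.87, "styles/explanatory": 0.93}
    failing = find_regressions(baseline, candidate)
    print("Regressions:", failing or "none")  # Regressions: ['preferences']
```

In a CI pipeline, a non-empty result would fail the build, much like a failing unit test. The small tolerance keeps ordinary run-to-run noise from blocking a release.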

Since AI products involve features and models interacting in unpredictable ways, having this common testing ground is essential for quality. These eval skills are becoming critical for product managers building AI products.

Let’s dive a bit deeper since I think evals are critically important. If you were writing an eval for the explanatory style, do you write a ground truth Q&A pair first?

Exactly. For the explanatory style, you might have 10 prompts you'd want that style to handle, like "How does GraphQL work?" or "How big is the galaxy?" You write ground truth answers to these questions to measure accuracy.
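A ground-truth eval pairs each prompt with a reference answer. How the comparison is scored isn't specified in the interview, so the sketch below uses a deliberately crude stand-in: it checks whether a response mentions a hand-picked list of key facts. The `GroundTruthItem` type, the `key_facts` field, and the example answers are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class GroundTruthItem:
    prompt: str
    reference_answer: str  # written by a person, kept for human review
    key_facts: list[str] = field(default_factory=list)  # phrases a correct answer must mention


def fact_coverage(response: str, item: GroundTruthItem) -> float:
    """Fraction of the item's key facts that appear (case-insensitively) in the response."""
    if not item.key_facts:
        return 1.0
    text = response.lower()
    hits = sum(1 for fact in item.key_facts if fact.lower() in text)
    return hits / len(item.key_facts)


def accuracy(responses: dict[str, str], items: list[GroundTruthItem], threshold: float = 1.0) -> float:
    """Share of prompts whose response covers at least `threshold` of its key facts."""
    if not items:
        return 0.0
    correct = sum(
        1 for item in items if fact_coverage(responses.get(item.prompt, ""), item) >= threshold
    )
    return correct / len(items)


ITEMS = [
    GroundTruthItem(
        prompt="How does GraphQL work?",
        reference_answer=(
            "Clients send a query that names exactly the fields they need; the server "
            "resolves it against a typed schema, usually behind a single endpoint."
        ),
        key_facts=["query", "schema", "single endpoint"],
    ),
    GroundTruthItem(
        prompt="How big is the galaxy?",
        reference_answer="The Milky Way is roughly 100,000 light-years across.",
        key_facts=["100,000", "light-years"],
    ),
]

if __name__ == "__main__":
    responses = {
        "How does GraphQL work?": "You write a query against the schema and POST it to a single endpoint.",
        "How big is the galaxy?": "Our galaxy is about 10,000 light-years wide.",  # deliberately wrong
    }
    print(accuracy(responses, ITEMS))  # 0.5: the galaxy answer misses "100,000"
```

Substring matching is brittle: it misses paraphrases and would accept a wrong answer that happens to contain the right words. That is one reason a model-based grader, as described next, can be more practical for open-ended questions.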

You can also write a "grader prompt" where you get Claude to evaluate based on the feature's intent. This works when you don't have ground truth; it's a proxy for quality. Instead of grounding the explanatory style in truth, you could ask Claude, "Is it providing a good explanation based on what it's trying to do?" Or for a concise style, "Is it concise while maintaining clarity and answering the question?" Then you would ask it to grade the response from 1 to 10. Those grades give you more of a synthetic eval than a ground-truth one.
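The grader-prompt idea can be sketched as a template plus a parser for the 1 to 10 score. This is an illustration under assumptions: the template wording and the "Score: N" reply format are hypothetical, and sending the prompt to Claude is left out. The two criteria reuse the questions quoted above.

```python
import re
from typing import Optional

GRADER_TEMPLATE = """You are grading a response written in the "{style}" style.

Question: {question}
Response: {response}

{criterion}
Reply on one line as "Score: N", where N is an integer from 1 to 10."""

# Hypothetical criteria, phrased after the questions quoted in the interview.
CRITERIA = {
    "explanatory": "Is it providing a good explanation based on what it's trying to do?",
    "concise": "Is it concise while maintaining clarity and answering the question?",
}


def build_grader_prompt(style: str, question: str, response: str) -> str:
    """Fill in the grader template for one (question, response) pair."""
    return GRADER_TEMPLATE.format(
        style=style, question=question, response=response, criterion=CRITERIA[style]
    )


def parse_score(grader_reply: str) -> Optional[int]:
    """Extract N from 'Score: N'; return None if missing or outside 1-10."""
    match = re.search(r"score:\s*(\d+)", grader_reply, flags=re.IGNORECASE)
    if match is None:
        return None
    score = int(match.group(1))
    return score if 1 <= score <= 10 else None


def mean_score(grader_replies: list[str]) -> Optional[float]:
    """Average of the parseable scores, or None if none could be parsed."""
    scores = [s for s in map(parse_score, grader_replies) if s is not None]
    return sum(scores) / len(scores) if scores else None


if __name__ == "__main__":
    print(build_grader_prompt("concise", "How big is the galaxy?", "About 100,000 light-years."))
    print(mean_score(["Score: 8", "score: 6", "I can't grade this."]))  # 7.0
```

Requesting a fixed "Score: N" line keeps parsing trivial, and dropping unparseable replies avoids silently counting them as low scores. A person should still spot-check the grades.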

So basically you get the AI to evaluate itself.

Yep, with human oversight. You're writing the prompt and ensuring it's representative of the use case.

I love how PMs can contribute to the actual end user product now because prompts and evals are so critical to the success of AI products. But it’s also really tough because the ground is constantly shifting beneath your feet.

AI needs constant monitoring systems just like software development does. You need to detect when model behavior changes unexpectedly so you can fix issues quickly.

Just as continuous integration and continuous deployment (CI/CD) became standard for engineering with automated testing, we'll develop similar frameworks for AI products. I think there will be a new language for building model-driven products.

Anthropic’s product values and how to get hired

Let’s talk a bit about Anthropic’s product principles and how to get hired. Mike Krieger recently joined as your CPO — I really love his principles around doing simple things first and fewer things better. Has he brought similar values to the product org at Anthropic?

Absolutely. There are lots of areas where he's pushed us to double down and put more wood behind fewer arrows. That sense of pragmatism and practicality in both the model and product comes from that ethos: making Claude indispensable for us, because we believe we're representative of the customers we want to work with.

Coding is a great example of this. Mike's helped us focus on doing things that make us want to use Claude and how that translates to success in the market. He's really accelerated closing the loop between us and our customers.

Yeah, it's a privilege to build a product that you actually enjoy using yourself. Not every PM gets that opportunity.

Yes, and that probably came from Instagram—he was building it and using it every day. Another thing he’s brought is technical leadership.

Having long been an engineering leader, he really appreciates the bottom-up energy that comes from engineering and other teams.

We have a great culture of bottom-up ideation, iteration, and adoption thanks to Mike. I'm constantly surprised by the prototypes and things people build that influence our product roadmap. It makes my life easier because I don't need to come up with all the ideas.

You've got to keep the startup vibe alive. You can't get this big tech situation where the PMs call all the shots.

Absolutely. You're curating vibes, not doing waterfall product development.

So if someone wants to join a product team at Anthropic, how can they get hired? What traits and skills do you look for?

Great question. I'll talk first about the overall pillars for Anthropic generally. Putting our mission first, working independently but also collaboratively, and committing to AI safety as a core value—you should be getting into AI for the right reasons.

I also think you need to be relentless.

We're in a competitive market in a time of rapid evolution, and we need to show up. There's a component of energy, relentlessness, and urgency that's important for any role, especially product management at Anthropic.

The last thing is a one-team mentality. We've talked about how the product development stool is more interconnected than ever, and now it's more like a chair with research being a key part. That makes the one-team vibe and constant feedback loops more important than ever.

For specific product roles, different teams need different skill sets:

For enterprise PM, I'd highlight customer centricity, cross-functional skills, being invested in go-to-market, and the ability to work with sales teams and get excited about customers.

For consumer PM, there's a marketing aspect, data familiarity, and comfort with rapid iteration cycles that you should have experience with or want to develop.

For core model feature development, upskilling on evals is really important—learning that language and framework and understanding how it applies to product development. Evals are an important new skill for all PMs.

I’ve been interviewing a lot of company leaders, and I think the best PMs have core values that match the company's. For example, I resonate with companies that obsess over customers and care about craft.

Yeah, ultimately if you're doing something that excites you, you're just going to do it better. You're going to want to put yourself into it, do it more, and do an excellent job.

Working someplace where you look at the leadership team, the values, or the mission of what they're trying to do, and it deeply resonates with you—that's going to make your life better. It's going to make work more meaningful to you, and I want my work to feel meaningful.

Yeah, I want to work at a company where I'm not thinking about the career ladder—I just think about how to make this product really good. The company should take care of the career ladder.

Yeah, and I just want to have fun. I want work to be fun with good people. I think about that as well.

What’s coming next for Claude

Last topic—you published the new model with this really awesome chart about Claude’s future. Can you give us a preview of what Claude will evolve to?

Absolutely. You know, I’ve been here for over a year, and when I started, we were very small and on the Claude 2 family of models. We had no API, no mobile app, and weren't available in many countries. It's been a crazy 15 months seeing Claude adopted at such scale around the world and through businesses.

But I think we're just getting started. Claude right now feels like a capable assistant—you have to know exactly how to prompt it and provide very specific information to get what you want.

In the next year, we're going to flip the script to make Claude more like a capable collaborator.

Can I feel like I can delegate meaningful tasks and work to this capable collaborator, as opposed to telling it exactly what to do, taking the input, and then going elsewhere to complete my process? How can I give the keys to Claude for it to actually take things off my plate? That's what things should start to feel like this year.

It's not just one feature that will do that. It's the confluence of many capabilities, both model and product, all moving in that direction. I think Claude will make meaningful strides in its knowledge, its ability to communicate, and its ability to do stuff for you.

It won't feel like an assistant anymore but something that helps you solve your biggest problems and most pressing needs while meaningfully taking things off your plate.

Yes, it feels like we’re moving towards a future where everyone will be managing AI agents.

Yes, as you start to delegate more tasks to AI with more information, it might come back to you with more questions. I think it'll feel more like collaborating in a one-on-one and via a shared doc with Claude as opposed to just a synchronous chat experience.

Any closing words or advice for people who want to get into AI products?

Try to build stuff to develop your intuition.

Just go try to build something fun, something timely in your life or pressing—a game or something that helps with tasks at home or work. You'll develop intuition for what it feels like to prompt models, realize when what you've built isn't working, and maybe think about evals for it.

Putting the puzzle pieces together by actually building something is the fastest way to gain intuition and learn if you're really excited about this. If you are, there are a lot of cool opportunities out there right now.

Thank you so much, Scott! If you enjoyed this interview, follow Scott on LinkedIn and start using Claude today.