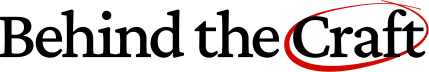

OpenAI Product Leader: The 7-Step Playbook for Defensible AI Products

The complete playbook to pick your moat, design user loops, engineer for cost curves, and build AI products that stick

Dear subscribers,

Today, I want to share a deep dive on how to build defensible AI products.

The graveyard is littered with AI products that got copied, lost their users, and burned through their funding without building moats.

That’s why I’m excited to invite Miqdad (OpenAI product leader) back to share his detailed 7-step playbook for building AI products that stick:

Define the arena where you’ll compete

Design the user loop (not the AI loop)

Pick your moats early

Engineer for cost curves, not features

Build your adoption wedge

Design your feedback flywheel

Scale through systems, not heroics

This is a 4,000-word deep dive taken directly from Miqdad’s AI Product Strategy course (the last cohort starts on October 20). Get $550 off the full course with my link, and the first 100 sign-ups will also get a personal strategy review from Miqdad.

Thanks to Glue for sponsoring this newsletter!

Team chat hasn’t changed much in a decade. It’s still cluttered, disconnected, and hard to focus.

Glue is rethinking what chat can be if AI is built right into the conversation. It connects directly to your tools through MCP, so your team can plan, collaborate, and take action without switching context or losing focus.

The 7-step playbook for defensible AI products

In traditional SaaS, speed to market gave you a real edge. Ship fast, lock in customers, and expand before competitors catch up.

But in AI, speed without defensibility just makes you the first target. So instead of racing to be first, you need to build on advantages that compound uniquely for you:

Data flywheels: Every user interaction with your AI generates proprietary data.

Workflow integration: Your AI sits inside core workflows, not on the side.

Trust & governance: In regulated industries, never surprising regulators is a moat.

Iteration speed: The faster you iterate and learn, the further you outrun rivals.

These moats create distance between you and competitors that widens with every new user, dataset, and feedback loop. So let’s cover 7 steps to build an AI strategy that will win the long game.

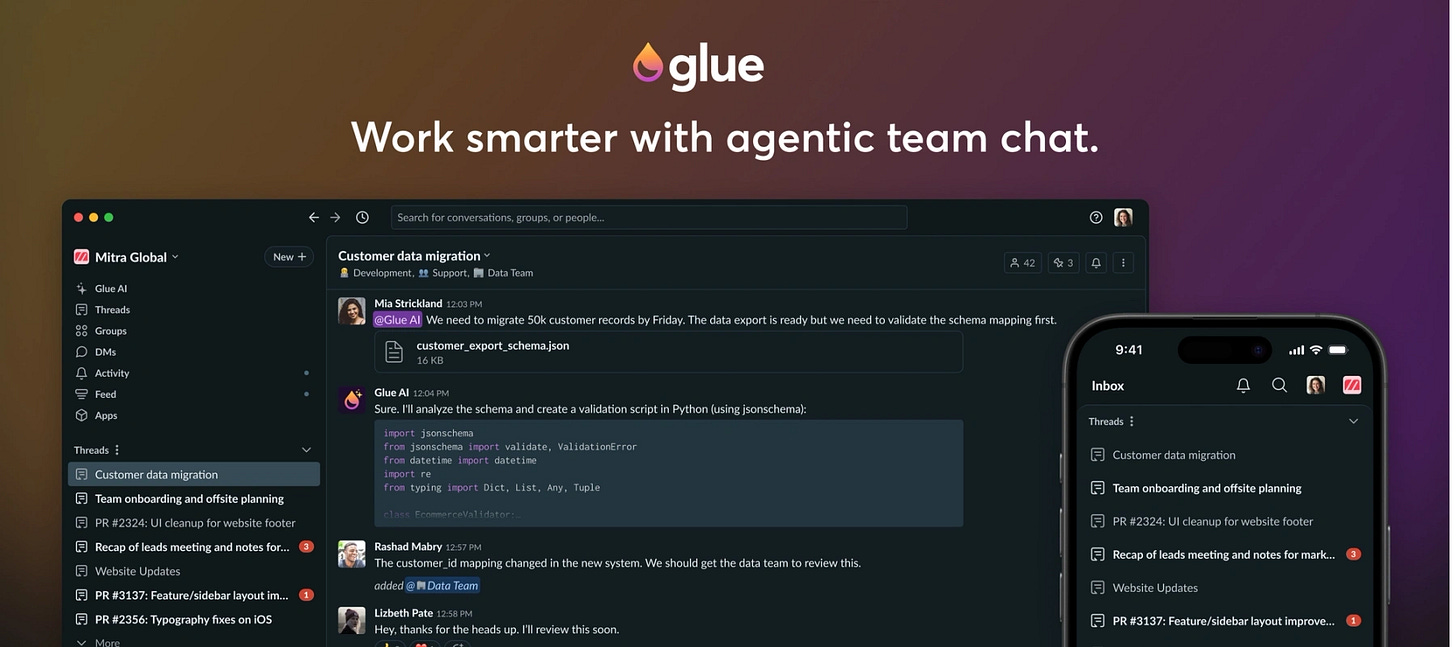

1. Define the arena where you’ll compete

The #1 mistake I see in AI product strategy is when leaders target a broad market instead of a specific arena.

I’m sure you’ve heard leaders make broad claims like “We’re building AI for healthcare / e-commerce / education.” But defensibility comes from focus.

3 filters to choose your arena

Run your desired arena through three filters:

High-frequency pain: Pick an arena where the problem happens constantly, not occasionally. “This costs me 30 minutes a day” beats “this is annoying once a quarter.” Defensibility comes from embedding your product in daily workflows.

Data exhaust: Does solving this problem generate data that improves the solution over time? Data exhaust is the structured, high-signal data created by every use. Without it, scale adds cost. With it, scale becomes a flywheel.

Adjacency potential: Your arena is your beachhead. Pick a starting point that opens doors to adjacent workflows. Mastery of one narrow problem gives you credibility, trust, and data to unlock the next two or three.

The arena test

When in doubt, use this arena test:

If your arena definition is under 5 words (e.g., “AI for marketing”), it’s too broad.

If your arena can’t be explained with a single example of who struggles, when, and why, it’s too abstract.

If your arena doesn’t naturally generate proprietary data, it’s not defensible.

Example: Clay

Clay launched as an AI-powered relationship intelligence platform instead of “AI for sales.” They pulled together fragmented contact data across tools (LinkedIn, email, CRMs) into a living system of record. By solving a high-frequency pain for sales teams, Clay (now valued at $3.1B) created a data advantage that compounded over time.

The most common mistakes in choosing your arena

Chasing TAM too early. Leaders say, “But if we do AI for e-commerce, that’s a $10B market!” Yes, but you’ll die in the noise. TAM is a lagging metric. Start with the most acute, winnable wedge.

Copying competitors. If your arena is defined by what’s trending on X (like “agentic AI workflows”), you’re already too late. By the time you see it, a dozen startups have already shipped it.

Mistaking workflow adjacency for customer adjacency. Just because doctors need insurance letters and diagnostic notes doesn’t mean you should do both. One is a high-frequency admin burden (insurance letters). The other is a regulated, life-critical task (diagnoses). Pick one arena to focus on first.

Don’t move forward until you define your arena clearly. Get it wrong, and every step after is a house built on sand.

2. Design the user loop (not the AI loop)

One of the biggest traps is obsessing over the AI loop instead of the user loop.

You’ve probably spent hours refining prompts and tweaking evals — after all, they’re the engine of your AI product. But users don’t live in your engine room. They don’t care that you improved your eval scores by 2%.

Users care about one thing: Did my life get easier, faster, or better in an obvious way?

Defensibility begins when you stop designing for the AI loop (prompt → inference → eval) and start designing for the user loop.

What the user loop looks like

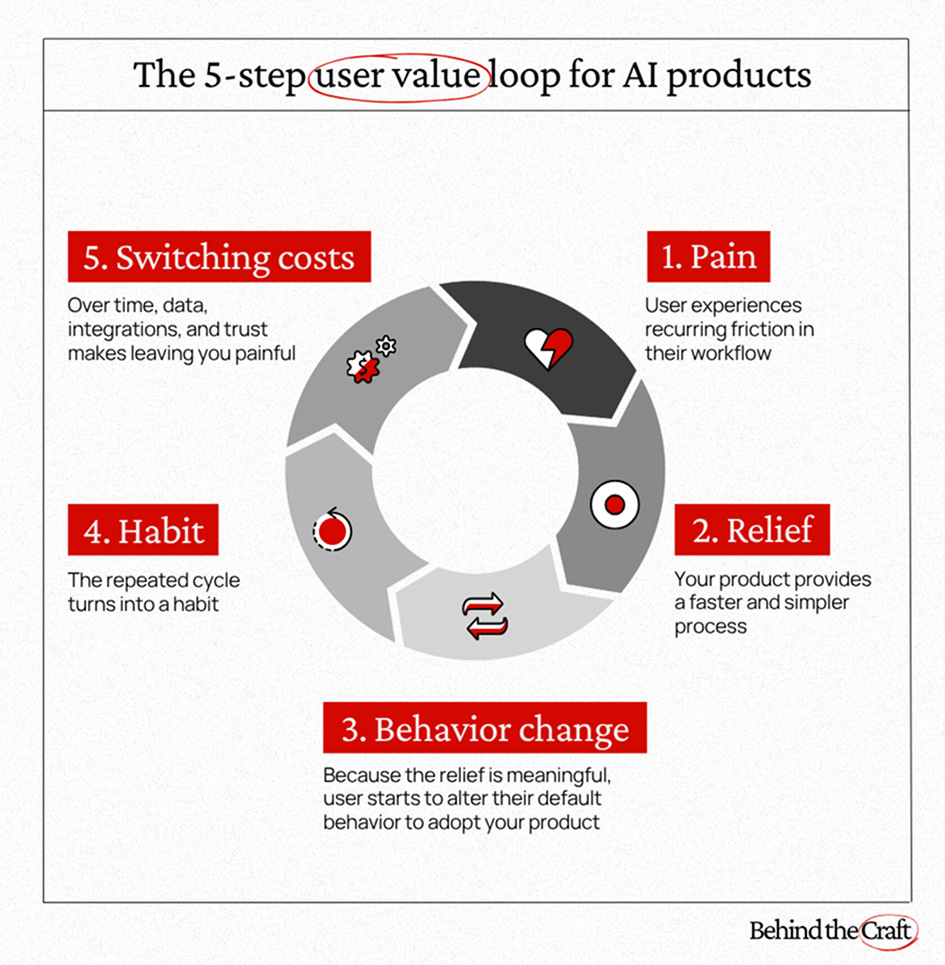

Here’s how the loop works:

Pain: The user experiences a recurring friction in their workflow.

Relief: Your product provides a faster, simpler, or more accurate process.

Behavior change: Because the relief is meaningful, the user starts to alter their default behavior to adopt your product.

Habit formation: The repeated cycle turns into a habit.

Switching costs: Over time, the data, integrations, and trust that you’ve built into this loop make leaving you painful. That’s your moat.

Unlike AI loops, user loops are sticky

Today’s “state-of-the-art” AI loops become tomorrow’s baseline. A rival can always replicate your prompts or swap in a better model.

User loops are what drives retention. Once you’ve rewired user behavior around your solution, you’ve created inertia. And inertia is the strongest form of defensibility in AI.

Think of Slack. Was its defensibility really “a chat app”? No. It was its user loop: Team messages → Instant collaboration → Teams stop emailing → Email declines → Slack becomes the default.

Once Slack’s user loop kicked in, competitors weren’t fighting a chat feature. They were fighting a habit.

Example: Granola

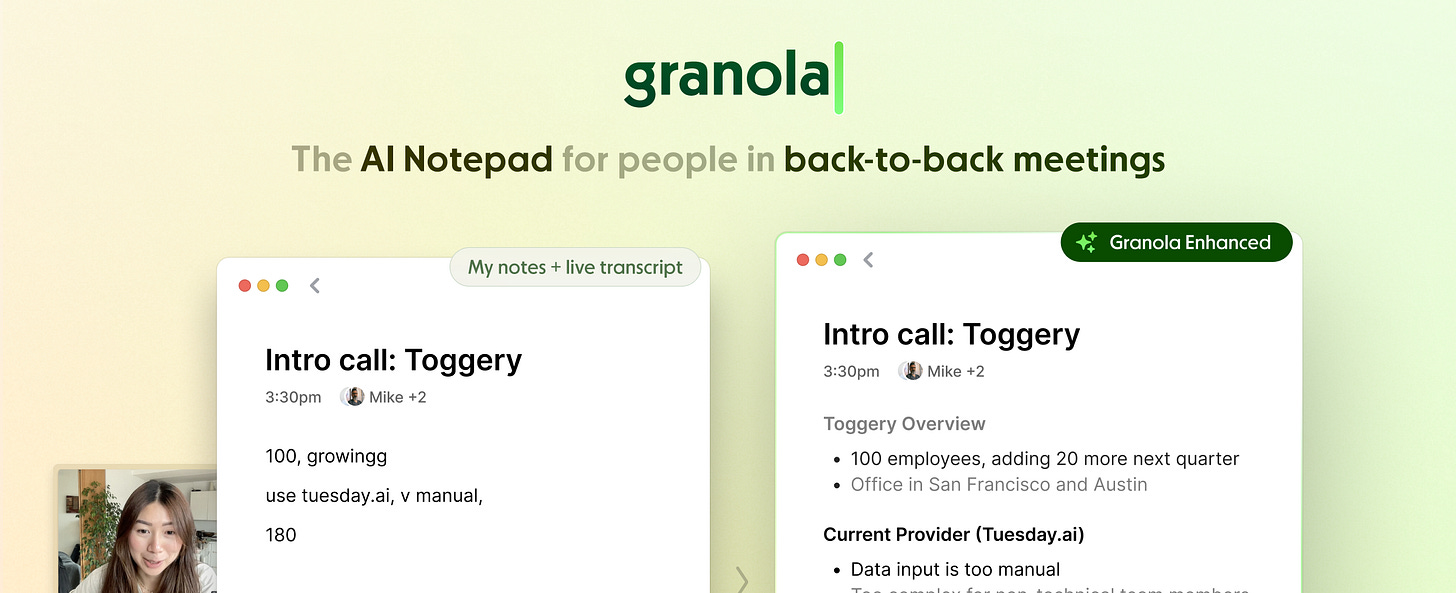

Granola is an AI notepad for meetings. The obvious pitch could have been “AI transcription,” but transcription alone is easy to copy and doesn’t create stickiness. Instead, Granola designed a strong user loop:

Pain: Professionals waste hours every week re-reading meeting transcripts, cleaning up notes, and tracking action items.

Relief: Granola automatically produces clean notes from meetings.

Behavior change: Users stop taking manual notes and rely on Granola.

Habit: Every meeting is funneled through Granola, making it a daily workflow.

Switching cost: Over time, Granola becomes the system of record for meeting history and structured action items: data no competitor can easily replicate.

Granola shows that defensibility doesn’t come from the AI model itself. It comes from designing loops where pain turns into relief, relief changes behavior, behavior forms habits, and habits generate data that compounds into a moat.

4 key user loop principles

Anchor on relief, not “wow”: Cool demos get attention. But only repeatable relief builds loops. Ask: What’s the 30-minute pain I can cut to 3 minutes?

Make relief visible: Users often forget the pain once it’s solved. Remind them. “You saved 22 minutes this week.” Visibility reinforces behavior change.

Tighten the feedback cycle: The shorter the cycle between pain and relief, the faster the habit forms. Daily loops beat weekly loops; weekly beats monthly.

Close the emotional loop: Relief is emotional. Users want to feel smarter, faster, or more in control. The best AI products reward them with both outcomes and identity reinforcement.
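To make the “make relief visible” principle concrete, here is a minimal Python sketch of how a product might compute a weekly “You saved X minutes” message. It assumes you record a baseline manual duration for each task type and log how long each task actually took with your product. The task names, baseline numbers, and functions below are hypothetical illustrations, not part of Miqdad’s course.

```python
from dataclasses import dataclass

# Hypothetical baselines: minutes each task took before the product existed.
BASELINE_MINUTES = {
    "meeting_notes": 30.0,
    "insurance_letter": 20.0,
}


@dataclass
class TaskEvent:
    task_type: str         # hypothetical task category, e.g. "meeting_notes"
    actual_minutes: float  # how long the task took with the product


def minutes_saved(events: list[TaskEvent]) -> float:
    """Sum (baseline - actual) across events.

    Events with no known baseline are skipped, and a task that took longer
    than its baseline counts as zero savings rather than negative.
    """
    total = 0.0
    for event in events:
        baseline = BASELINE_MINUTES.get(event.task_type)
        if baseline is None:
            continue
        total += max(baseline - event.actual_minutes, 0.0)
    return total


def relief_message(events: list[TaskEvent]) -> str:
    """Turn this week's events into a user-facing 'visible relief' nudge."""
    return f"You saved {round(minutes_saved(events))} minutes this week."


if __name__ == "__main__":
    week = [
        TaskEvent("meeting_notes", 3.0),      # the 30-minute pain cut to 3 minutes
        TaskEvent("insurance_letter", 25.0),  # slower than baseline: counts as 0
        TaskEvent("unknown_task", 5.0),       # no baseline: ignored
    ]
    print(relief_message(week))  # prints "You saved 27 minutes this week."
```

The one design choice worth noting is clamping at zero: surfacing “negative savings” to the user would undercut the behavior change the loop depends on, though you would likely still want to log those cases internally as a signal that relief isn’t landing.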

The user loop test

When in doubt, use the user loop test:

Does my product relieve a pain the user feels at least weekly?

Is the relief visible and measurable?

Does the relief cause users to change behavior (not just click once)?

Can the loop generate switching costs over time (data, habits, trust)?

If the answer to any of these is “no,” you don’t have a defensible product yet.

3. Pick your moat ingredients early

The brutal truth is that in AI, features are disposable.

Anyone can copy your prompt template or wrap a model in a slick UI.

What they can’t copy overnight are the moat ingredients you bake into your product from the very beginning.

Too many leaders think of moats as something you add later once you scale. By then, it’s too late. In AI, you build your product with your desired moat in mind.

3 moats that actually work

After a decade working on AI products, I’ve found there are only 3 moats that matter: