15 Hot Takes That Cut Through the AI Hype

What I really think after 2 years of using AI and talking to 100+ AI leaders

Dear subscribers,

Today, I want to share 15 hot takes that cut through the AI hype.

I posted these takes on social media, and they hit a nerve, I think because they say out loud what many of us are thinking.

So let’s cover three areas where I think AI needs a reality check:

Cutting through the AI hype

Building useful AI products

Building AI native teams

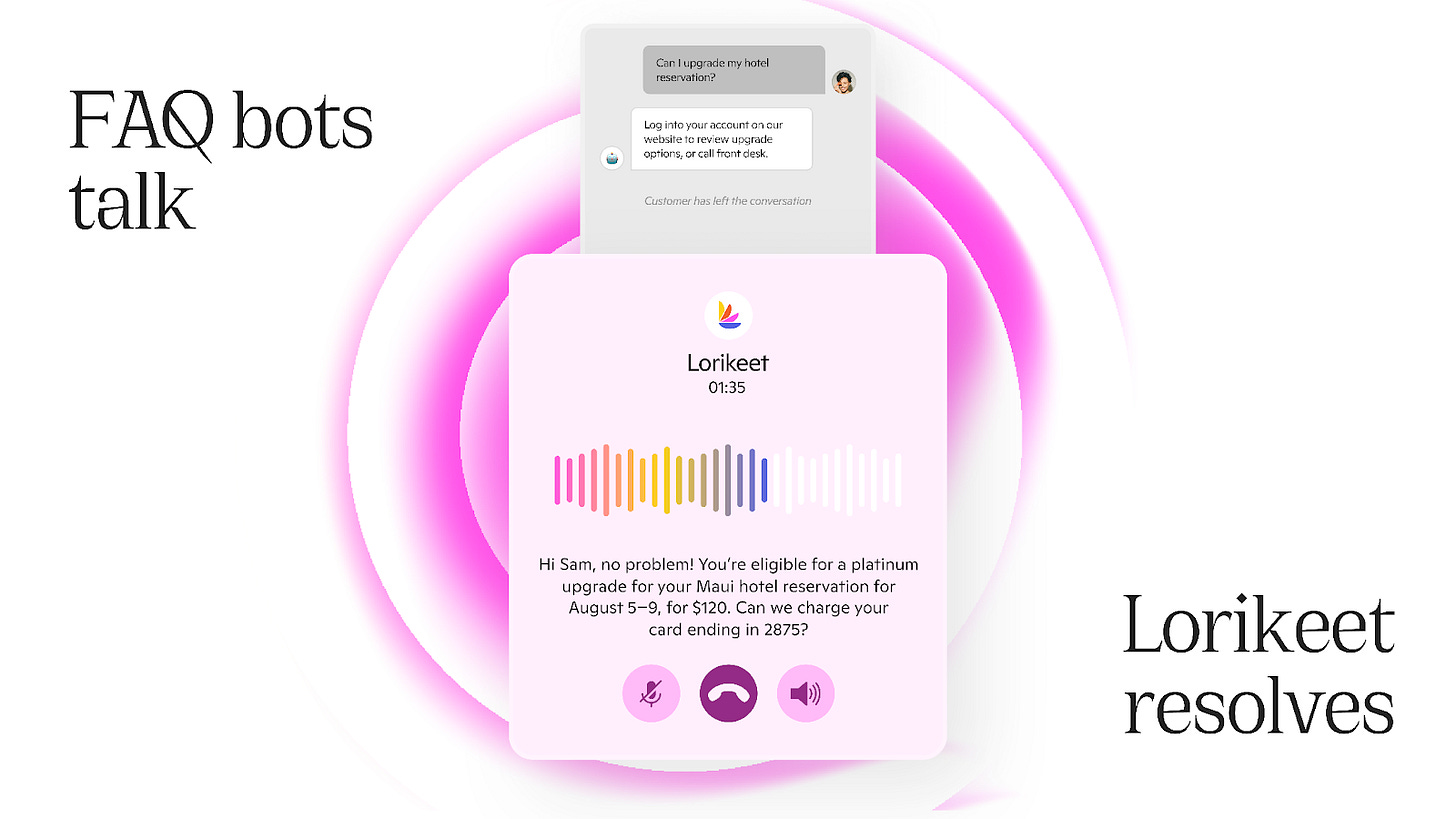

Thanks to Lorikeet for sponsoring today’s newsletter!

One of my pet peeves is being put on hold for 45 minutes to reach customer support.

Lorikeet is a universal AI concierge that works across chat, email, and voice 24/7. It understands your systems, policies, and workflows to solve customer issues end-to-end with 99% accuracy and loops in human support when needed. Lorikeet will even buy out your existing support contract if you upgrade by October 31st.

Cutting through the AI hype

The term “AI PM” has become a meaningless branding exercise. Everyone’s an “AI PM” on LinkedIn these days, but slapping “AI PM” on your resume doesn’t make you one. What matters is proof of work: what have you actually shipped with AI recently, and does it solve a real customer problem?

Any AI influencer who posts “(product) is dead” should go on your block list. I’ve blocked at least a dozen engagement farmers who post nonsense like “this changes everything” or “RIP (product).” Ignore these people, and instead follow folks who are building and sharing real lessons, like Lee (Cursor), Kieran (Every), Matt (Replit), and Cat / Meaghan (Claude Code).

Retention is the real signal, not ARR. Despite billions of dollars being poured into AI, I only use a handful of AI tools regularly (see above). Good retention is what matters, and it’s incredibly rare in the AI space. Founders and investors who only chase ARR numbers while ignoring retention are in for a rude awakening.

If you're an AI founder, remember that VCs have many bets in their portfolio. But you only have one business — so focus on retention, grow it sustainably, and don't play the ARR game.

Reading and understanding code matters more than ever. As much as I’d like to believe otherwise, I simply can’t vibe code anything as good as what a real engineer builds. AI can help with writing code, but understanding how software actually works remains essential. I asked my audience: "If you had to teach a busy person to become technical fast, how would you do it today?" This reply nailed it:

Building useful AI products

AI agents often aren’t the right solution. Every startup I talk to is building an AI agent. But agents are expensive (they eat tokens for breakfast) and only make sense for complex, valuable tasks. The most valuable agent tasks (coding, research, productivity) also face intense competition from top AI labs. Can you really build another AI coding agent that’s better than the ones from OpenAI and Anthropic?

A decision tree to figure out if you should really build an AI agent

Use AI to solve the boring, manual workflows instead. The best AI products aren’t behind a ✨ button. Instead, they streamline the core workflows that people already have. Where are your users manually entering data or copying and pasting between tools? That's where the opportunities are. Read my guide to help you identify the right AI use cases.

Consumer vibe coding isn't a sustainable business. Nobody wakes up thinking: “What new apps can I vibe code from scratch today?” That’s why the best AI coding companies (e.g., Cursor) are targeting enterprises and engineers instead. Maybe there’s an opportunity to disrupt website builders like Squarespace.

Always build the simple thing first. Trying to build fancy agents and RAG systems when simple prompting works is a recipe for failure and frustration. Start simple, and don't solve problems that will stop being problems once a better AI model comes along.

Shiny demos without evals are just vaporware. I’ve seen many people present flashy demos only to stumble when asked about evaluations (and I’ve learned this lesson the hard way myself!). When demoing your AI product, you should have clear answers to: What user problem are you solving? What ground-truth dataset and evaluations do you have? How have you prepared for the model to fail?

The most important part of evals is the human part. Getting your team aligned on the right eval criteria and on what good looks like is the foundation of all your evals. Try to avoid off-the-shelf criteria like “helpfulness,” and tailor your evals to measure real user problems instead.

Match your eval rigor to your product stage. Running fancy LLM-as-judge evals in the early days, while your product is still changing, is like writing a 30-page PRD before you build the product. Start with a few dozen test cases while you’re still prototyping, and scale to robust evals when you’re ready to launch.
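To make the “few dozen test cases” stage concrete, here is a minimal sketch in Python. It assumes a hypothetical `generate_reply` function standing in for your product’s model call, and a hypothetical support-bot use case; the names, inputs, and checks are illustrative, not a specific eval framework. Each case pairs an input with a task-specific pass/fail check instead of a generic “helpfulness” score, and the harness simply reports which cases pass.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class EvalCase:
    """One hand-written eval case: an input plus a task-specific pass/fail check."""
    name: str
    user_input: str
    check: Callable[[str], bool]


def generate_reply(user_input: str) -> str:
    """Hypothetical stand-in for the product's model call; swap in your real LLM call."""
    if "refund" in user_input.lower():
        return "I've started your refund. You'll get a confirmation email within 24 hours."
    return "Could you share your order number so I can look into this?"


# Criteria tailored to a real user problem (hypothetical support-bot example),
# rather than an off-the-shelf metric like "helpfulness".
TEST_CASES = [
    EvalCase("refund confirms next step", "I want a refund for order 123",
             lambda out: "refund" in out.lower() and "email" in out.lower()),
    EvalCase("asks for order number when missing", "My package never arrived",
             lambda out: "order number" in out.lower()),
    EvalCase("no made-up discounts", "Can I get a discount?",
             lambda out: "%" not in out),
]


def run_evals(cases: list[EvalCase], model: Callable[[str], str]) -> dict[str, bool]:
    """Run every case through the model and record which ones pass."""
    return {case.name: case.check(model(case.user_input)) for case in cases}


if __name__ == "__main__":
    results = run_evals(TEST_CASES, generate_reply)
    for name, passed in results.items():
        print(f"{'PASS' if passed else 'FAIL'}  {name}")
    print(f"pass rate: {sum(results.values())}/{len(results)}")
```

Keeping each check as a plain function makes it cheap to rewrite while the product is still changing; once things stabilize, the same list can grow into a larger ground-truth dataset or be paired with LLM-as-judge scoring.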

Building AI native teams

Rapid feedback loops are everything. In my interview with Cat (Claude Code), she shared that her best features came from launching prototypes to 1,000+ users and then actually listening to what resonates. Everyone, from engineers to designers to PMs, should feel empowered to prototype and build feedback loops.

Stop pretending you have a 2-year roadmap. When I asked Cat about her long-term roadmap, she said: “A year or two is a really long time. I can talk about the next few months.” The best AI teams aren’t planning more than 6 months out. This doesn’t mean they’re not strategic; they just know that things will change and don’t want to waste time on planning theater.

Stop consuming and start building. The best way to learn AI is to use it to solve your own problems. When you get stuck, ask AI for help. Remember that you can just do things instead of waiting for someone to explain how things work.

Be wary of AI making you lazy. I'd like to think AI makes me smarter, but the truth is that it's also made me lazy. If I have a problem and don't have Claude, ChatGPT, or Gemini available, I just think, "Screw it, I'll think about this later with AI's help." Be wary of AI making you intellectually lazy.

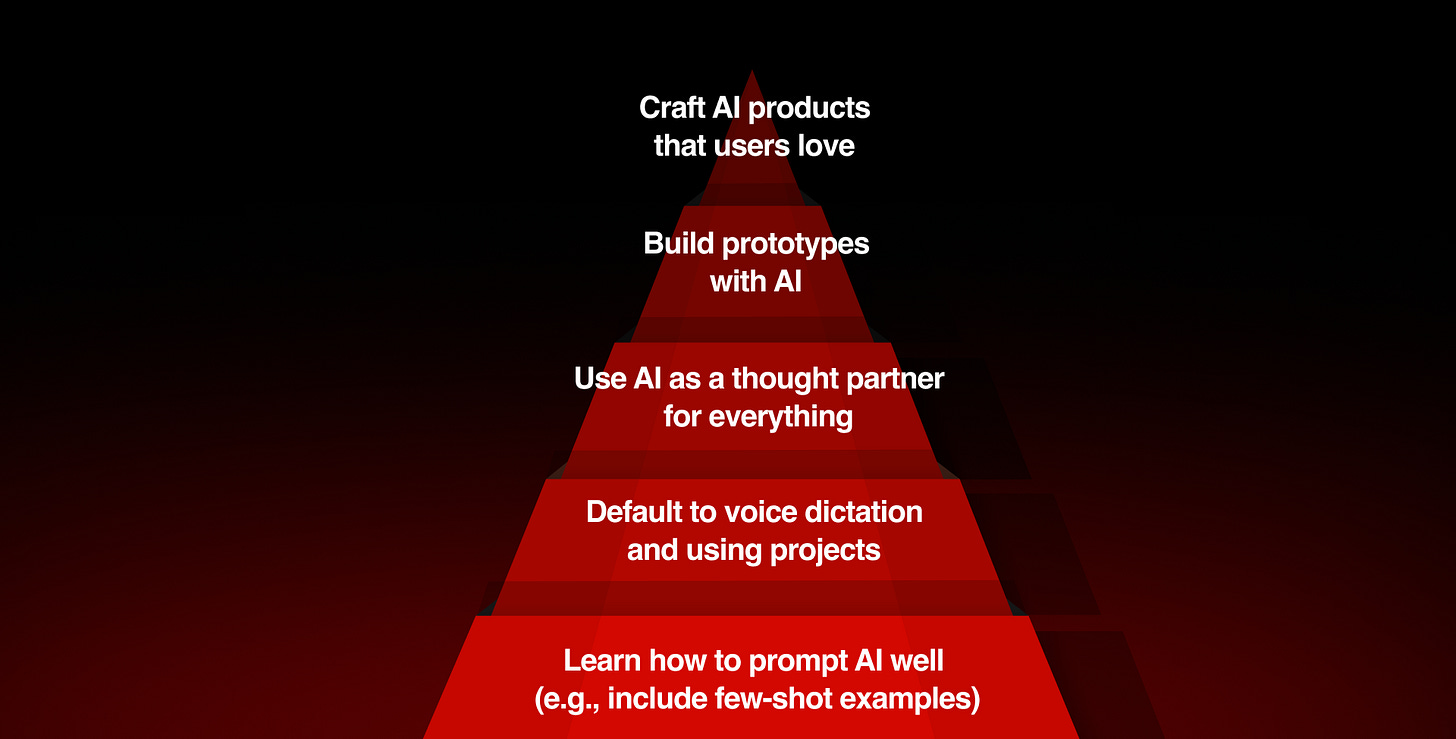

Become AI native in the right order. You won’t be great at prototyping if you don’t know how to prompt well and you won’t know how to build great AI products if you don’t use AI tools natively in your own workflows. Follow the 5 steps below and check out my detailed AI learning path for more.

See my detailed AI learning path with links to bite-sized guides for each step

Learn to separate the signal from the noise

Let’s face it, there’s a lot of noise in the AI space.

For me, the best signal comes from talking to real AI builders (which is why I do the podcast) and working with AI to solve my own problems.

What AI takes do you wish more people would say out loud? I’d love to hear your thoughts in the comments.
