The AI Skill That Will Define Your PM Career in 2025 | Aman Khan (Arize)

Why every product manager needs to write AI evals and how to get started now

Dear subscribers,

Today, I want to share a new interview with Aman Khan.

Aman is the Director of Product at Arize AI. Last year, we both heard the Chief Product Officers of OpenAI and Anthropic share that AI evaluations will be the most important skill for PMs in 2025. Aman gave me a crash course on how to build this critical skill in our new interview.

Watch now on YouTube, Apple, and Spotify.

Aman and I talked about:

(00:00) Evals force you to get into your user's shoes

(03:42) 5 skills to build right now to become a great AI PM

(07:43) How curiosity leads to better AI products

(10:20) You can build AI without the job title

(17:00) A deep dive into why AI evals are so important

(24:53) Example of running evals for an AI customer support agent

(31:32) When humans need to be in the loop for evals

(35:46) How to get better at writing evals right now

(39:39) My personal prompt and eval for transcripts

(44:23) Catching the AI wave is a lot like surfing

P.S., If you’d like to dig deeper after this interview, Arize also published their Definitive Guide on AI Evals that’s worth checking out.

5 skills to become a great AI PM (even if you don’t have the title!)

Welcome Aman! So, everyone's talking about becoming an AI PM these days. What key skills should someone build to become an AI PM?

An AI PM is anyone who’s building products with or around AI. I believe long-term:

All PMs will be AI PMs.

You'll either build on top of LLM APIs, use models in your backend, or use AI tools to solve problems in your daily work.

Here are the five key skills to focus on:

Start with the fundamentals: Understand the high-level areas of AI and ML used in industry today. Use resources like Andrej Karpathy's talks and Marily’s algorithm map.

Customer obsession: Use your understanding of AI capabilities to solve real customer problems.

Curiosity to learn and prototype: Try new AI tools to understand what’s possible.

Learn from great AI experiences: Study what works in AI products.

Understand evals and observability: Measure how well your AI is performing.

If I had to put it in one sentence:

Learn to ship high-quality AI products in the real world, gain experience, adopt best practices, and then measure their impact.

I think the curiosity part is particularly important. Many big-company PMs lack that curiosity because they're too busy writing slide decks and docs.

Exactly. Imagine showing up to a meeting with a prototype instead of a PRD. That's the shift PMs will need to make to communicate better in this new AI world.

It's important to emphasize that you don't need PM as a job title to start working on these skills. AI has arguably made it easier to ship stuff yourself than to get buy-in as a PM.

Yes, it's funny: people always say, "The PM is the CEO of the product," but I don't think that was ever true until now. With these new AI superpowers, you can write and test marketing copy, build a quick website, and get things done without waiting for the engineering team.

When people think of AI products, they think of chatbots. But many good AI products instead focus on fixing tiny friction points in core workflows. What have been some of your favorite AI tools in the market?

Lately, I've been obsessed with prototyping tools and coding agents.

For example, we were in a meeting discussing the need to ship a dashboard for a customer to track enterprise-wide product usage. After debating putting an engineer on it for several weeks, I opened Replit and typed in a prompt. I then copied in our API docs and asked it to build a usage dashboard. By the end of the meeting, we had something working we could look at.

I've also had great experiences with other tools. Recently, I needed to refresh my website. When I first built it in college, I spent nights and weekends working on React. Now, with Vercel's v0, you can build beautiful front ends by dropping in images and quickly getting functional prototypes.

Another interesting tool I've been experimenting with is Claude's writing styles feature. You can give it a sample of your writing, and it will try to mimic your style. It's not perfect, but it helps with the cold-start problem.

I've also been asking Claude to edit my writing using my best writing samples. It works well.

Exactly. The skill becomes about getting the right output and learning how to prompt it effectively.

The AI prototyping tools are interesting. They're good for making toy apps, proof of concepts, and maybe personal websites. However, they haven't reached the point where you can make and sell a SaaS product online.

On YouTube, you'll find tons of tutorials showing people building SaaS apps in 10 minutes using tools like Cursor or v0, and some claim they're building entire companies this way. I'm not sure that’s true today, but I think it’s only a matter of time.

I love how these AI tools could reduce the time PMs waste. Even getting a PM job requires case interviews on hypotheticals, and then you spend time writing documents and doing reviews—none of which are about the product you're trying to ship. I hope AI tools help shift attention to delivering real value to customers.

I think we're getting closer to that. At a recent conference, Claire Vo gave a great talk showing Venn diagrams of functional areas and jobs. I believe that specific roles will become less important while skills and tool usage will matter more. Soon, it won't just be about writing documents but about solving end-user problems.

Why writing evals is the most important skill for PMs to build in 2025

Let's go deep on evals now. What exactly is an eval, and why is it the most important skill for PMs to build in the AI era?

Let me frame this in the context of a product. At its simplest, imagine a diagram in which a product is a box in the middle with an input and output. The input might be a customer trying to solve a problem, and the output might be the solution.

For example, say someone visits a website wanting to book a trip to San Francisco. The input is their request, and the output is booking their trip. While there are many steps in between, the input and output matter at the end of the day.

Evals are how we measure how well that middle box (the product) is performing.

In traditional software development, systems were deterministic—you could check whether APIs were being called correctly or the code was bug-free. But with LLMs, we're dealing with statistical systems that have inherent variability.

This highlights why we need evals to measure LLM performance. At a high level, evaluations combine your data, method, and metrics to analyze the system as a whole.
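To make "data, method, and metrics" concrete, here is a minimal sketch using the trip-booking example. The dataset, the rule-based `product` function (a toy stand-in for an LLM-powered system), and the exact-match metric are illustrative assumptions, not Arize's tooling.

```python
from typing import Callable

# Data: hypothetical (user request, expected outcome) pairs.
DATASET = [
    ("Book a trip to San Francisco", "booking_flow"),
    ("Cancel my reservation", "cancellation_flow"),
    ("What's the weather in SF?", "out_of_scope"),
]


def product(request: str) -> str:
    """Method: a toy stand-in for the 'middle box'; a real product would call an LLM here."""
    text = request.lower()
    if "book" in text:
        return "booking_flow"
    if "cancel" in text:
        return "cancellation_flow"
    return "out_of_scope"


def accuracy(system: Callable[[str], str], dataset: list[tuple[str, str]]) -> float:
    """Metric: the fraction of inputs whose output matches the expected outcome."""
    hits = sum(system(request) == expected for request, expected in dataset)
    return hits / len(dataset)


print(accuracy(product, DATASET))  # 1.0
```

Because an LLM is non-deterministic, in practice you would rerun this over many inputs (and often several times per input) rather than trusting a single pass.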

How do you get started learning how to write evals?

It's about how well your product solves the user problem.

Previously, users only had one input type, like clicking a button. Now, they have thousands of different inputs. These systems have become multimodal with voice input, and the data becomes much richer for debugging with LLMs. Users can tell you their problems through chat while also clicking buttons.

Where do evaluations fit in the AI product development process?

Think of it as training to become a doctor.

The evaluation is like the test you give the doctor before they start seeing patients. You want to make sure the system understands what it's getting into. Before it goes into production, you're trying to solve technical and scientific problems. The evaluation comes at the earliest step, and like a medical exam, it should represent the real world.
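One way to apply the doctor analogy is as a release gate: the system has to pass its exam before it reaches users. A minimal sketch, assuming hypothetical metric names and thresholds that each team would choose for itself:

```python
def release_gate(scores: dict[str, float], thresholds: dict[str, float]) -> list[str]:
    """Return the metrics that miss their minimum threshold; an empty list means the exam was passed."""
    # A metric missing from `scores` counts as 0.0, so it fails instead of being silently skipped.
    return [name for name, minimum in thresholds.items() if scores.get(name, 0.0) < minimum]


# Hypothetical thresholds for a support agent.
THRESHOLDS = {"accuracy": 0.90, "groundedness": 0.95}

print(release_gate({"accuracy": 0.93, "groundedness": 0.91}, THRESHOLDS))  # ['groundedness']
```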

How do evaluations compare to A/B testing?

Evals are like a new kind of A/B test.

Before, you'd have to split your audience and get into production to see what people prefer. Evals are how you do that testing with LLM-based systems earlier in the process.
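Here is a rough sketch of that idea: instead of splitting live traffic, both versions run on the same fixed test set and you tally which one does better on each case. The return-policy questions, the two variant functions, and the exact-match scorer are all hypothetical.

```python
from typing import Callable

Variant = Callable[[str], str]

# Hypothetical test set built from a return policy.
DATASET = [
    ("How long do I have to return an item?", "30 days"),
    ("Can I return opened electronics?", "no"),
    ("Do you refund shipping costs?", "no"),
]


def exact_match(output: str, expected: str) -> float:
    return 1.0 if output.strip().lower() == expected else 0.0


def compare_variants(a: Variant, b: Variant, dataset, score=exact_match) -> dict[str, int]:
    """Run both variants on the same inputs and tally which one scores higher on each case."""
    tally = {"a": 0, "b": 0, "tie": 0}
    for question, expected in dataset:
        score_a, score_b = score(a(question), expected), score(b(question), expected)
        if score_a > score_b:
            tally["a"] += 1
        elif score_b > score_a:
            tally["b"] += 1
        else:
            tally["tie"] += 1
    return tally


# Two hypothetical versions of the system, e.g. before and after a prompt change.
def variant_a(question: str) -> str:
    return "30 days"


def variant_b(question: str) -> str:
    return "30 days" if "how long" in question.lower() else "no"


print(compare_variants(variant_a, variant_b, DATASET))  # {'a': 0, 'b': 2, 'tie': 1}
```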

So why is writing evaluations so challenging?

Evals force you to get into the shoes of your user.

You can no longer just hypothesize what they might do; you must articulate that understanding in writing. There's also a high level of subjectivity and non-determinism that makes it challenging.

In self-driving, humans evaluate whether an AI makes good left turns. Some people would say a turn is too fast, others too slow. When you add variables like someone crossing the sidewalk, it gets very nuanced. This relates to the LLM world—judging whether an AI writes a good sentence depends heavily on context.

Understanding the clusters of scenarios your system will face and breaking them into components makes writing evaluations easier. You're iterating in a tighter loop.
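A simple way to act on those clusters is to report pass rates per scenario instead of one overall number, so you can see which kind of request needs work. The cluster names and results below are made up for illustration.

```python
from collections import defaultdict


def score_by_cluster(results: list[tuple[str, bool]]) -> dict[str, float]:
    """results: (cluster, passed) pairs. Returns the pass rate for each cluster."""
    totals: dict[str, list[int]] = defaultdict(lambda: [0, 0])  # cluster -> [passed, total]
    for cluster, passed in results:
        totals[cluster][0] += int(passed)
        totals[cluster][1] += 1
    return {cluster: passed / total for cluster, (passed, total) in totals.items()}


# Hypothetical eval results for a support agent.
results = [
    ("returns", True), ("returns", True),
    ("shipping", False), ("shipping", True),
    ("billing", False),
]
print(score_by_cluster(results))  # {'returns': 1.0, 'shipping': 0.5, 'billing': 0.0}
```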

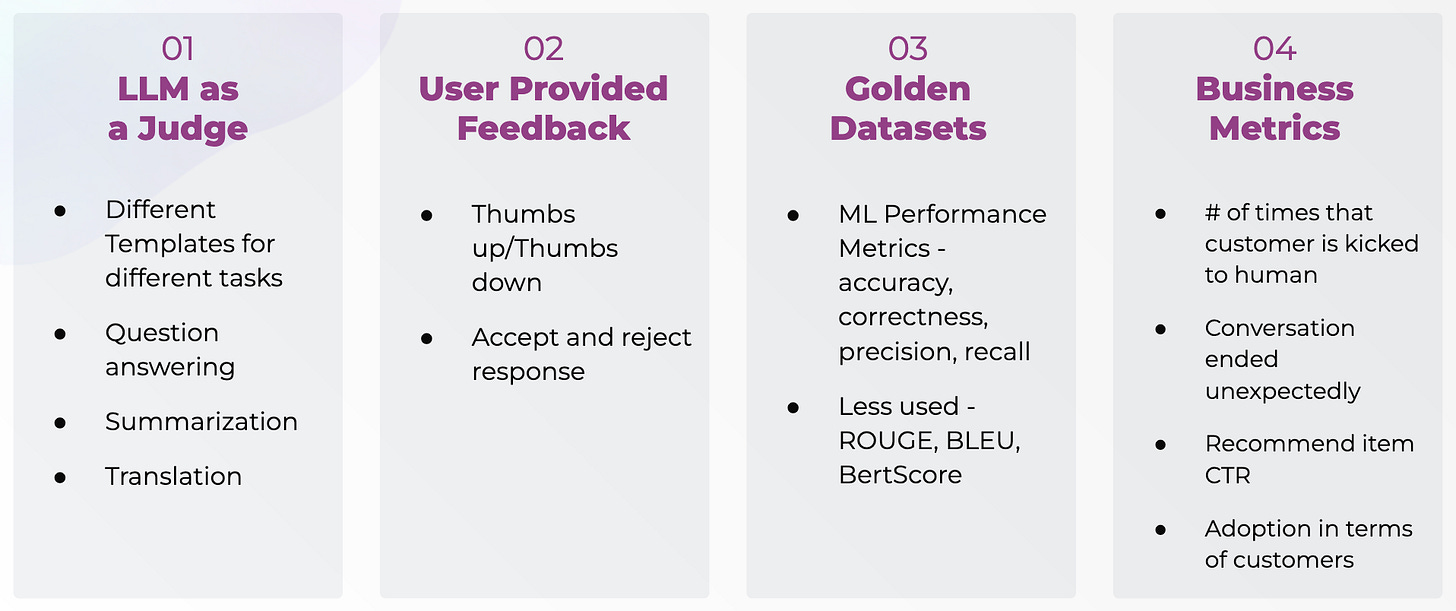

The different types of eval and when to use each one

Let’s use a real example to bring this to life. How would you evaluate an AI customer support agent?

Start with existing data by uploading your return policies and past customer support conversations. Use this to write ground-truth answers for common scenarios and implement basic filters for harmful content.
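A minimal sketch of that first step, assuming a hypothetical 30-day return policy and a tiny keyword blocklist. Production systems typically use a dedicated moderation model for harmful content; the keyword check here is only a stand-in.

```python
# Hypothetical policy fact and blocklist, for illustration only.
GROUND_TRUTH = {
    "How many days do I have to return an item?": "30 days",
}
BLOCKLIST = {"idiot", "stupid"}


def passes_content_filter(response: str) -> bool:
    """Very basic harmful-content check: reject responses containing blocklisted words."""
    words = {word.strip(".,!?").lower() for word in response.split()}
    return BLOCKLIST.isdisjoint(words)


def matches_ground_truth(question: str, response: str) -> bool:
    """Pass only if the response states the ground-truth fact and clears the filter."""
    expected = GROUND_TRUTH[question]
    return expected.lower() in response.lower() and passes_content_filter(response)
```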

Use LLMs to expand your test data by generating more scenarios based on your real examples. You can have one LLM create test cases and another evaluate responses.
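The two-model setup can be sketched with plain functions. Here `generate`, `agent`, and `judge` are hypothetical placeholders for whatever LLM calls you use; the stubs below only exist so the example runs without an API.

```python
from typing import Callable

CaseGenerator = Callable[[str], list[str]]


def expand_test_set(seeds: list[str], generate: CaseGenerator, per_seed: int = 3) -> list[str]:
    """Keep each real example, add up to `per_seed` generated variations, and drop duplicates."""
    seen: set[str] = set()
    cases: list[str] = []
    for seed in seeds:
        for case in [seed, *generate(seed)[:per_seed]]:
            key = " ".join(case.lower().split())  # normalize case and whitespace
            if key not in seen:
                seen.add(key)
                cases.append(case)
    return cases


def pass_rate(cases: list[str], agent: Callable[[str], str], judge: Callable[[str, str], str]) -> float:
    """A separate judge labels each agent answer as 'correct' or 'incorrect'."""
    labels = [judge(case, agent(case)) for case in cases]
    return labels.count("correct") / len(labels)


# Hypothetical stub standing in for a generator LLM.
def fake_generate(seed: str) -> list[str]:
    return [f"{seed} It's urgent.", seed.upper(), f"Hi, {seed.lower()}"]


cases = expand_test_set(["Where is my order?"], fake_generate)
print(len(cases))  # 3 (the upper-cased variant is dropped as a duplicate)
```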

Test with real users through A/B testing, sending a small percentage of traffic to the AI system while comparing against human agents. This validates if your experimental results match real-world performance.
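A common way to send a small, stable share of traffic to the AI agent is to hash each user ID into a bucket, so the same user always gets the same experience. This is a general technique sketch; the 5% default is an arbitrary assumption.

```python
import hashlib


def assign_arm(user_id: str, ai_fraction: float = 0.05) -> str:
    """Route a stable fraction of users to the AI agent; the rest stay with human agents."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 10_000  # bucket in 0..9999
    return "ai_agent" if bucket < ai_fraction * 10_000 else "human_agent"
```

Comparing resolution or satisfaction rates between the two arms then tells you whether the offline eval results hold up with real users.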

Measure key metrics, including accuracy (following rules correctly), hallucination detection (responding accurately with given context), and overall tone and sentiment. Different use cases may require specific additional metrics.
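Once each response has been labeled (by humans or a judge model), those metrics are just aggregates. A minimal sketch, assuming a hypothetical `Judgment` record with one field per metric:

```python
from dataclasses import dataclass


@dataclass
class Judgment:
    follows_policy: bool  # accuracy: were the rules applied correctly?
    grounded: bool        # hallucination check: is the answer supported by the given context?
    tone: str             # e.g. "friendly", "neutral", "rude"


def rate(flags) -> float:
    flags = list(flags)
    return sum(flags) / len(flags)


def summarize(judgments: list[Judgment]) -> dict[str, float]:
    return {
        "accuracy": rate(j.follows_policy for j in judgments),
        "hallucination_rate": rate(not j.grounded for j in judgments),
        "rude_rate": rate(j.tone == "rude" for j in judgments),
    }
```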

You can also check out Arize’s full guide for evals.

How do you evaluate subjective aspects like tone?

Humans need to be in the loop for initial evaluation because you're solving problems for real humans. You need humans to look at the inputs and outputs before you can start handing that off to another LLM to do it for you.
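One common way to decide when that hand-off is safe (a general practice, not necessarily Arize's process) is to have humans and the LLM judge label the same sample, then measure how often they agree. Cohen's kappa corrects raw agreement for agreement expected by chance; the labels below are hypothetical.

```python
from collections import Counter


def agreement(human: list[str], judge: list[str]) -> tuple[float, float]:
    """Return (raw agreement, Cohen's kappa) between human and LLM-judge labels."""
    if not human or len(human) != len(judge):
        raise ValueError("need two non-empty label lists of equal length")
    n = len(human)
    observed = sum(h == j for h, j in zip(human, judge)) / n
    h_counts, j_counts = Counter(human), Counter(judge)
    expected = sum(h_counts[label] * j_counts[label] for label in set(human) | set(judge)) / n**2
    kappa = 1.0 if expected == 1 else (observed - expected) / (1 - expected)
    return observed, kappa


print(agreement(["good", "good", "bad", "bad"], ["good", "good", "bad", "good"]))  # (0.75, 0.5)
```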

How do you run evals for a complex AI system that uses prompting, retrieval-augmented generation (RAG), and other techniques?

You want to break up the problem into smaller problems and components.

Think of it like building a Lego structure: it won't be one big block. You want to break down your system so you can evaluate each component in its own iterative loop. This principle comes from software engineering, where you have unit tests at individual granular levels.
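For example, the retrieval step of a RAG system can get its own unit tests, separate from the generation step. This sketch uses a toy keyword-overlap retriever and made-up policy documents in place of a real vector store.

```python
# Hypothetical policy documents; a real system would use a vector store.
DOCS = {
    "returns": "items can be returned within 30 days of delivery",
    "shipping": "standard shipping takes 3 to 5 business days",
}


def retrieve(query: str, docs: dict[str, str], k: int = 1) -> list[str]:
    """Toy keyword-overlap retriever: rank documents by words shared with the query."""
    query_words = set(query.lower().split())
    ranked = sorted(docs, key=lambda name: len(query_words & set(docs[name].split())), reverse=True)
    return ranked[:k]


def test_retrieval_finds_return_policy():
    assert retrieve("can items be returned after delivery", DOCS) == ["returns"]


def test_retrieval_finds_shipping_policy():
    assert retrieve("how long does standard shipping take", DOCS) == ["shipping"]
```

If retrieval passes but final answers still fail, you know to look at the prompt or model instead.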

You should be able to iterate across your prompt, retrieval, and model to see which changes have the biggest impact. The key is having a tool that helps you iterate with all these parameters rather than assuming which part needs updating.
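A simple way to avoid guessing is to score every combination of settings and see which one moves the metric. In this sketch, `evaluate` is a hypothetical function that runs your eval suite for one configuration; the prompt and model names are placeholders.

```python
from itertools import product
from typing import Callable


def sweep(evaluate: Callable[..., float], prompts, top_ks, models):
    """Score every combination of prompt, retrieval depth, and model; return the best and all scores."""
    scores = {
        (prompt, top_k, model): evaluate(prompt=prompt, top_k=top_k, model=model)
        for prompt, top_k, model in product(prompts, top_ks, models)
    }
    best = max(scores, key=scores.get)
    return best, scores


# Hypothetical stand-in for running the eval suite on one configuration.
def fake_evaluate(prompt: str, top_k: int, model: str) -> float:
    return (0.10 if prompt == "v2" else 0.0) + 0.01 * top_k + (0.05 if model == "large" else 0.0)


best, scores = sweep(fake_evaluate, ["v1", "v2"], [1, 5], ["small", "large"])
print(best)  # ('v2', 5, 'large')
```

A full grid grows quickly, so in practice teams often change one knob at a time once they know which component matters most.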

How important is the speed of iteration when building AI products?

It’s super important.

You don't know what will work until you make the change. Sometimes, we think a problem will be incredibly difficult, but adding one line to the prompt completely solves it. You never know until you try, so you must iterate quickly.

Does this mean that the traditional waterfall process is dead?

Yeah, I think the waterfall process of writing a PRD and handing it to designers and engineers no longer works. The development cycle needs to be much shorter, like clock cycles in a GPU. You need constant iterations and improvements.

How can someone get better at writing evaluations without an ML background?

At its core, an eval is just another prompt.

For example, you can write: "You are the best customer support agent in the world, and your job is to check the work of this other agent." You've created a judge LLM. But there are some key considerations:

Constrain the judge to specific labels rather than open-ended responses. Instead of getting essays, enforce simple outputs like "correct" or "incorrect."

Make evaluations fast by using the right models and multi-threading.

Include examples in your prompts that show what correct answers look like.

Don't optimize costs too early: focus on getting the best results with a state-of-the-art model before worrying about model costs.
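The tips above can be combined into a small judge harness: the judge prompt from this answer, few-shot examples, a strict parser that only accepts "correct" or "incorrect", and concurrent calls for speed. `call_llm` is a hypothetical placeholder for whatever model client you use; the test stub below is not a real model.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable

LABELS = ("correct", "incorrect")

JUDGE_TEMPLATE = """You are the best customer support agent in the world, and your job is to check the work of this other agent.
Reply with exactly one word: correct or incorrect.

Examples:
{examples}

Customer message: {question}
Agent response: {answer}
Label:"""


def build_prompt(question: str, answer: str, examples: list[tuple[str, str, str]]) -> str:
    """Fill the judge template, including few-shot examples that show correct labels."""
    shots = "\n\n".join(
        f"Customer message: {q}\nAgent response: {a}\nLabel: {label}" for q, a, label in examples
    )
    return JUDGE_TEMPLATE.format(examples=shots, question=question, answer=answer)


def parse_label(raw: str) -> str:
    """Accept only the first word, and only if it is an allowed label."""
    words = raw.strip().split()
    first = words[0].strip(".").lower() if words else ""
    return first if first in LABELS else "unparseable"


def judge_all(pairs, examples, call_llm: Callable[[str], str], workers: int = 8) -> list[str]:
    """Send judge prompts concurrently; results come back in the same order as `pairs`."""
    prompts = [build_prompt(q, a, examples) for q, a in pairs]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        raw_outputs = list(pool.map(call_llm, prompts))
    return [parse_label(raw) for raw in raw_outputs]
```

Tracking how often the parser returns "unparseable" is a quick signal that the judge prompt needs tightening.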

How does Arize help with evaluations?

Arize supports AI development at three levels:

Free education and handbooks to help you get started

Open source tools like Phoenix for writing evals and monitoring products

Enterprise products for mature teams needing security and scale

The open-source tools are available at phoenix.arize.com, with a Slack community for support. We aim to help more people build better AI systems from different perspectives.

Any closing words of advice for folks who want to go deep on AI PM?

In Silicon Valley, people feel they need to pivot their careers quickly and get that "AI PM" title on LinkedIn. But I think of it like surfing—success comes from being in the right position before the wave hits. The AI wave is still early, so there's plenty of room for different perspectives and approaches. What the ecosystem needs right now is more people bringing their unique approaches to building with AI.

It sounds like getting in the right position isn't about taking many AI courses or following a playbook; it's about trying things yourself.

Definitely. The key is to:

Try things out yourself

Join the right communities

Connect with people building AI products

Learn from others and let them learn from you

Thank you, Aman. If you enjoyed this interview, please follow Aman on LinkedIn and check out my guide on AI evaluations.

You can also check out: